MongoDB Driver Specifications

The modern MongoDB driver consists of a number of components, each of which is thoroughly documented in this repository. Though this information is readily available and extremely helpful, it lacks a high-level overview that ties the specs together into a cohesive picture of what a MongoDB driver is.

Architecturally, an implicit hierarchy exists within the drivers, so describing them in terms of an onion model feels appropriate.

Layers of the Onion

The “drivers onion” is meant to represent how various concepts, components and APIs can be layered atop each other to build a MongoDB driver from the ground up, or to help understand how existing drivers have been structured. Hopefully this representation of MongoDB’s drivers helps provide some clarity, as the complexity of these libraries - like the onion above - could otherwise bring you to tears.

Serialization

At the lowest level, all MongoDB drivers need to know how to work with BSON. BSON (short for “Binary JSON”) is a binary-encoded serialization of JSON-like documents, and like JSON, it supports the nesting of arrays and documents. BSON also contains extensions that allow representation of data types that are not part of the JSON spec.

Specifications: BSON, ObjectId, Decimal128, UUID, DBRef, Extended JSON

Communication

Once BSON documents can be created and manipulated, the foundation for interacting with a MongoDB host process has been laid. Drivers communicate by sending database commands as serialized BSON documents using MongoDB’s wire protocol.

Using the provided connection string and options, the driver establishes a socket connection to a host and performs an initial handshake, sending a simple hello command to verify that the host is in fact a valid MongoDB server. Based on the response to this first command, the driver can continue to establish and authenticate connections.

Specifications: OP_MSG, Command Execution, Connection String, URI Options, OCSP, Initial Handshake, Wire Compression, SOCKS5, Initial DNS Seedlist Discovery
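To make the wire protocol concrete, here is a minimal Python sketch (standard library only) of how a `hello` command could be framed as an OP_MSG message. The frame consists of a 16-byte header (messageLength, requestID, responseTo, opCode 2013), a 32-bit flagBits field, and a single kind-0 section that holds the command as a BSON document. The helper names are hypothetical, and the tiny encoder only handles int32 and string values. A real handshake also carries client metadata, compression negotiation and other fields that are omitted here.

```python
import struct

OP_MSG = 2013  # opCode identifying an OP_MSG message

def _cstring(s: str) -> bytes:
    return s.encode("utf-8") + b"\x00"

def encode_command(doc: dict) -> bytes:
    """Encode a flat document with int32 and string values (a tiny BSON subset)."""
    body = b""
    for key, value in doc.items():
        if isinstance(value, int):
            body += b"\x10" + _cstring(key) + struct.pack("<i", value)  # int32
        elif isinstance(value, str):
            data = value.encode("utf-8") + b"\x00"
            body += b"\x02" + _cstring(key) + struct.pack("<i", len(data)) + data  # string
        else:
            raise TypeError(f"unsupported value for {key!r}")
    # The document length counts the 4-byte prefix, the elements, and the trailing null
    return struct.pack("<i", 4 + len(body) + 1) + body + b"\x00"

def build_op_msg(command: dict, request_id: int) -> bytes:
    flag_bits = 0                                # no checksum, no moreToCome
    section = b"\x00" + encode_command(command)  # kind 0: the single command body
    payload = struct.pack("<I", flag_bits) + section
    # MsgHeader: messageLength, requestID, responseTo, opCode (little-endian int32s)
    header = struct.pack("<iiii", 16 + len(payload), request_id, 0, OP_MSG)
    return header + payload

# The command name must be the first key; "$db" names the target database.
msg = build_op_msg({"hello": 1, "$db": "admin"}, request_id=1)
```

The resulting 52-byte message is what gets written to the socket. The server's reply uses the same framing, with `responseTo` set to the request's ID.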

Connectivity

Now that a valid host has been found, the cluster’s topology can be discovered and monitoring connections can be established. Connection pools can then be created and populated with connections. The monitoring connections will subsequently be used for ensuring operations are routed to available hosts, or hosts that meet certain criteria (such as a configured read preference or acceptable latency window).

Specifications: SDAM, CMAP, Load Balancer Support
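Connection pooling can be illustrated with a deliberately tiny Python sketch. Idle connections are reused, and a semaphore keeps the pool at no more than `max_pool_size` connections. `SimplePool`, `check_out`, `check_in` and the `connect` factory are hypothetical names. CMAP specifies far more (minPoolSize, maxConnecting, pool generations and clearing, wait queue timeouts, events), and none of that is modeled here.

```python
import threading
from collections import deque

class SimplePool:
    """Toy pool: reuse idle connections and never exceed max_pool_size in total."""

    def __init__(self, connect, max_pool_size=100):
        self._connect = connect            # hypothetical factory that opens a connection
        self._idle = deque()               # idle connections; the newest is reused first
        self._lock = threading.Lock()
        self._slots = threading.BoundedSemaphore(max_pool_size)

    def check_out(self, timeout=None):
        # Wait for a free slot; each slot corresponds to one checked-out connection.
        if not self._slots.acquire(timeout=timeout):
            raise TimeoutError("timed out waiting for a pooled connection")
        with self._lock:
            if self._idle:
                return self._idle.pop()
        try:
            return self._connect()         # no idle connection: open a new one
        except BaseException:
            self._slots.release()          # give the slot back if connecting fails
            raise

    def check_in(self, conn):
        with self._lock:
            self._idle.append(conn)
        self._slots.release()
```

A new connection is only opened when no idle one is available, so idle plus checked-out connections never exceed the limit.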

Authentication

Establishing and monitoring connections to MongoDB ensures they’re available, but MongoDB server processes typically require connections to be authenticated before they will accept commands. MongoDB offers many authentication mechanisms, such as SCRAM, x.509, Kerberos, LDAP, OpenID Connect and AWS IAM, most of which MongoDB drivers implement using the Simple Authentication and Security Layer (SASL) framework.

Specifications: Authentication
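To illustrate what a SASL-based mechanism computes, the following Python sketch derives the SCRAM-SHA-256 client proof defined in RFC 5802 and RFC 7677: ClientProof = ClientKey XOR HMAC(StoredKey, AuthMessage). It assumes the salt, iteration count and AuthMessage have already been obtained from the saslStart/saslContinue exchange. It also skips the SASLprep normalization of the password that a real driver applies. For SCRAM-SHA-1, MongoDB additionally pre-hashes the password as the hex MD5 digest of "username:mongo:password". The function names are hypothetical.

```python
import base64
import hashlib
import hmac

def _hmac_sha256(key: bytes, msg: bytes) -> bytes:
    return hmac.new(key, msg, hashlib.sha256).digest()

def scram_sha256_client_proof(password: str, salt: bytes, iterations: int, auth_message: str) -> str:
    """Return the base64-encoded ClientProof for SCRAM-SHA-256.

    Assumption: `password` is already SASLprep-normalized (not done here).
    """
    # SaltedPassword := Hi(password, salt, i), i.e. PBKDF2 with HMAC-SHA-256
    salted_password = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    client_key = _hmac_sha256(salted_password, b"Client Key")
    stored_key = hashlib.sha256(client_key).digest()
    client_signature = _hmac_sha256(stored_key, auth_message.encode("utf-8"))
    client_proof = bytes(k ^ s for k, s in zip(client_key, client_signature))
    return base64.b64encode(client_proof).decode("ascii")
```

A server that stores only StoredKey can verify the proof without the password. It recomputes ClientSignature, XORs it with the proof to recover ClientKey, and checks that the SHA-256 hash of ClientKey equals StoredKey.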

Availability

All client operations will be serialized as BSON and sent to MongoDB over a connection that will first be checked out of a connection pool. Various monitoring processes exist to ensure a driver’s internal state machine contains an accurate view of the cluster’s topology so that read and write requests can always be appropriately routed according to MongoDB’s server selection algorithm.

Specifications: Server Monitoring, SRV Polling for mongos Discovery, Server Selection, Max Staleness
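The latency window used in server selection can be sketched in a few lines of Python. The input is the list of servers that already satisfy the read preference (that filtering is not shown). The window contains every server whose average round-trip time is within localThresholdMS (15 ms by default) of the fastest one. `ServerDescription` and `select_server` are hypothetical names. Picking uniformly at random from the window is a simplification: the Server Selection specification further refines the final choice among eligible servers.

```python
import random
from dataclasses import dataclass

@dataclass
class ServerDescription:
    address: str
    round_trip_time_ms: float  # average RTT measured by server monitoring

def select_server(suitable, local_threshold_ms=15.0, rng=random):
    """Pick a server from the latency window of already-filtered suitable servers."""
    if not suitable:
        return None  # a real driver keeps re-checking until serverSelectionTimeoutMS
    fastest = min(s.round_trip_time_ms for s in suitable)
    window = [s for s in suitable if s.round_trip_time_ms <= fastest + local_threshold_ms]
    return rng.choice(window)
```

With RTTs of 10 ms, 20 ms and 40 ms, only the first two servers fall inside the default 15 ms window.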

Resilience

At their core, database drivers are client libraries meant to facilitate interactions between an application and the database. MongoDB’s drivers are no different in that regard, as they abstract away the underlying serialization, communication, connectivity, and availability functions required to programmatically interact with your data.

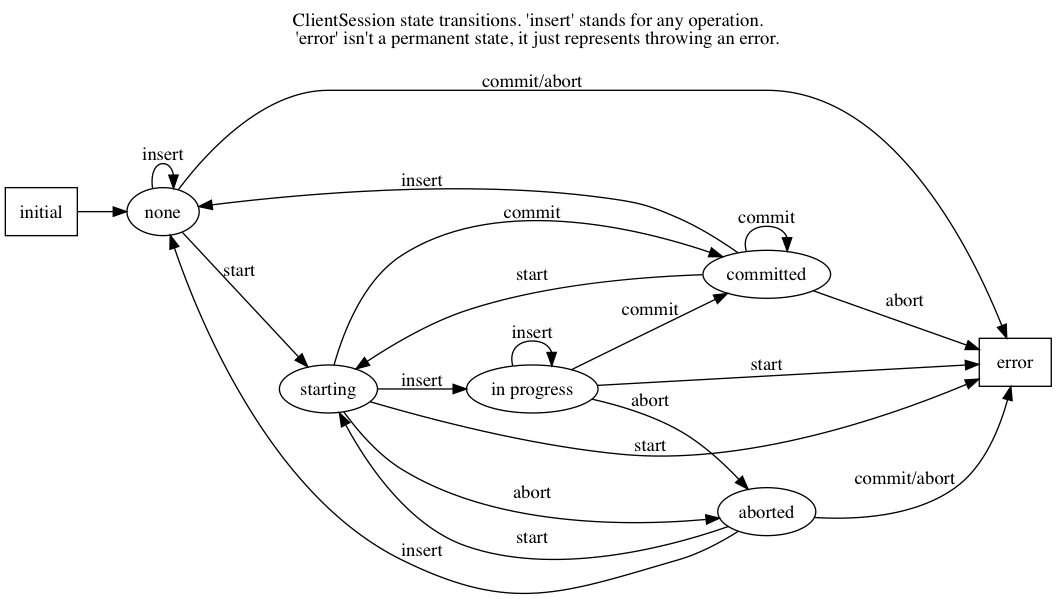

To further enhance the developer experience while working with MongoDB, drivers can add various resilience features built on logical sessions, such as retryable writes, causal consistency and transactions.

Specifications: Retryability (Reads, Writes), CSOT, Consistency (Sessions, Causal Consistency, Snapshot Reads, Transactions, Convenient Transactions API)
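The core of retryable writes can be summarized as "retry once, on a newly selected server, with the same transaction number". In this Python sketch, `RetryableError`, `select_server`, `run_write` and the dict-based session are hypothetical stand-ins. A real driver also:

- checks that the deployment supports retryable writes,
- decides retryability from network errors and the RetryableWriteError error label, and
- honors CSOT deadlines.

```python
class RetryableError(Exception):
    """Hypothetical stand-in for a network error or an error labeled RetryableWriteError."""

def execute_retryable_write(session, select_server, run_write):
    # Each new write gets the next transaction number on its logical session.
    session["txn_number"] += 1
    txn_number = session["txn_number"]
    try:
        return run_write(select_server(), txn_number)
    except RetryableError:
        # Retry exactly once on a freshly selected server. Reusing the same
        # (lsid, txnNumber) lets the server recognize the retry and avoid
        # applying the write twice if the first attempt actually succeeded.
        return run_write(select_server(), txn_number)
```

If the retry also fails, its error propagates to the caller. The specification has additional rules about which of the two errors to surface.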

Programmability

Now that we can serialize commands and send them over the wire through an authenticated connection, we can begin actually manipulating data. Since all database interactions take the form of commands, removing all documents that match a filter means issuing a delete command such as the following (a limit of 0 deletes every matching document):

db.runCommand(
  {
    delete: "orders",
    deletes: [ { q: { status: "D" }, limit: 0 } ]
  }
)

Though this command is not exceedingly complex, more single-purpose APIs can provide a better developer experience, allowing the above example to be expressed as:

db.orders.deleteMany({ status: "D" })
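Under the hood, such a helper builds the same command. The Python sketch below shows how hypothetical `delete_one`/`delete_many` helpers could map onto the `delete` command. They differ only in `limit`: 1 deletes at most one matching document and 0 deletes all matches. `run_command` stands in for whatever sends a command over a connection.

```python
def delete_command(collection: str, filter: dict, many: bool) -> dict:
    """Build the delete command behind a deleteOne/deleteMany-style helper."""
    return {
        "delete": collection,
        # limit 0 deletes every match; limit 1 deletes at most one document
        "deletes": [{"q": filter, "limit": 0 if many else 1}],
    }

def delete_many(run_command, collection, filter):
    return run_command(delete_command(collection, filter, many=True))

def delete_one(run_command, collection, filter):
    return run_command(delete_command(collection, filter, many=False))
```

`delete_many(run_command, "orders", {"status": "D"})` produces exactly the command document shown earlier.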

To provide a cleaner and clearer developer experience, many specifications exist to describe how these APIs should be consistently presented across driver implementations, while still providing the flexibility to make APIs more idiomatic for each language.

Advanced security features such as client-side field level encryption are also defined at this layer.

Specifications: Resource Management (Databases, Collections, Indexes), Data Management (CRUD, Collation, Write Commands, Bulk API, Bulk Write, R/W Concern), Cursors (Change Streams, find/getMore/killCursors), GridFS, Stable API, Security (Client Side Encryption, BSON Binary Subtype 6)

Observability

With database commands being serialized and sent to MongoDB servers, and responses being received and deserialized, our driver can be considered fully functional for most read and write operations. Because MongoDB drivers abstract away most of the complexity involved in creating and maintaining the connections these commands are sent over, mechanisms for introspecting a driver’s behavior can give developers added confidence that things are working as expected.

The inner workings of connection pools, connection lifecycle, server monitoring, topology changes, command execution and other driver components are exposed through events that developers can capture by registering listeners. This can be an invaluable troubleshooting tool and can help with monitoring the health of an application.

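const { MongoClient, BSON: { EJSON } } = require('mongodb');

// Print an event as Extended JSON, prefixed with its label
function debugPrint(label, event) {
  console.log(`${label}: ${EJSON.stringify(event)}`);
}

async function main() {
  // monitorCommands: true is required for command monitoring events to be emitted
  const client = new MongoClient("mongodb://localhost:27017", { monitorCommands: true });
  client.on('commandStarted', (event) => debugPrint('commandStarted', event));
  client.on('connectionCheckedOut', (event) => debugPrint('connectionCheckedOut', event));

  try {
    await client.connect();
    const coll = client.db("test").collection("foo");
    // This findOne() triggers the connectionCheckedOut and commandStarted events
    await coll.findOne();
  } finally {
    // close() returns a Promise; await it so the process exits cleanly
    await client.close();
  }
}

main().catch(console.error);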

Given the example above (using the Node.js driver), the specified connection and command events are logged as the driver emits them:

connectionCheckedOut: {"time":{"$date":"2024-05-17T15:18:18.589Z"},"address":"localhost:27018","name":"connectionCheckedOut","connectionId":1}

commandStarted: {"name":"commandStarted","address":"127.0.0.1:27018","connectionId":1,"serviceId":null,"requestId":5,"databaseName":"test","commandName":"find","command":{"find":"foo","filter":{},"limit":1,"singleBatch":true,"batchSize":1,"lsid":{"id":{"$binary":{"base64":"4B1kOPCGRUe/641MKhGT4Q==","subType":"04"}}},"$clusterTime":{"clusterTime":{"$timestamp":{"t":1715959097,"i":1}},"signature":{"hash":{"$binary":{"base64":"AAAAAAAAAAAAAAAAAAAAAAAAAAA=","subType":"00"}},"keyId":0}},"$db":"test"},"serverConnectionId":140}

The preferred method of observing internal behavior would be standardized logging once it is available in all drivers (DRIVERS-1204). Until then, only event-based monitoring is consistently available. In the future, additional observability tooling such as OpenTelemetry support may also be introduced.

Specifications: Command Logging and Monitoring, SDAM Logging and Monitoring, Standardized Logging, Connection Pool Logging

Testability

To ensure that existing as well as net-new drivers can be effectively tested for correctness and performance, most specifications define a standard set of YAML tests to improve driver conformance. This allows specification authors and maintainers to describe functionality once, with confidence that the tests can be executed alike by language-specific test runners across all drivers.

Though the unified test format greatly simplifies language-specific implementations, not all tests can be represented in this fashion. In those cases the specifications may describe tests to be manually implemented as prose. By limiting the number of prose tests that each driver must implement, engineers can deliver functionality with greater confidence while also minimizing the burden of upstream verification.

Specifications: Unified Test Format, Atlas Data Federation Testing, Performance Benchmarking, BSON Corpus, Replication Event Resilience, FAAS Automated Testing, Atlas Serverless Testing

Conclusion

Most (if not all) of the information required to build a new driver or maintain existing drivers technically exists within the specifications. However, without a mental model of their composition and architecture, it can be extremely challenging to know where to look.

Peeling the “drivers onion” should hopefully make reasoning about them a little easier, especially with the understanding that everything can be tested to validate that individual implementations are “up to spec”.

Specification Owners

This is very loosely based on GitHub’s CODEOWNERS.

Driver Mantras

When developing specifications (and the drivers themselves), we adhere to the following principles:

Strive to be idiomatic, but favor consistency

Drivers attempt to provide the easiest way to work with MongoDB in a given language ecosystem, while specifications attempt to provide a consistent behavior and experience across all languages. Drivers should strive to be as idiomatic as possible while meeting the specification and staying true to the original intent.

No Knobs

Too many choices stress out users. Whenever possible, we aim to minimize the number of configuration options exposed to users. In particular, if a typical user would have no idea how to choose a correct value, we pick a good default instead of adding a knob.

Topology agnostic

Users test and deploy against different topologies or might scale up from replica sets to sharded clusters. Applications should never need to use the driver differently based on topology type.

Where possible, depend on server to return errors

The features available to users depend on a server’s version, topology, storage engine and configuration. So that drivers don’t need to code and test all possible variations, and to maximize forward compatibility, always let users attempt operations and let the server error when it can’t comply. Exceptions should be rare: for cases where the server might not error and correctness is at stake.

Minimize administrative helpers

Administrative helpers are methods for admin tasks, like user creation. These are rarely used and have maintenance costs as the server changes the administrative API. Don’t create administrative helpers; let users rely on “RunCommand” for administrative commands.

Check wire version, not server version

When determining server capabilities within the driver, rely only on the maxWireVersion in the hello response, not on the X.Y.Z server version. An exception is testing server development releases, as the server bumps wire version early and then continues to add features until the GA.
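A minimal Python sketch of wire-version-based checks, assuming `hello` is the already-parsed hello response. The driver's own supported range (`DRIVER_MIN_WIRE_VERSION`, `DRIVER_MAX_WIRE_VERSION`) uses hypothetical values. The feature thresholds come from the Server Wire version and Feature List table in this document. Compatibility requires the driver's and the server's wire version ranges to overlap. Individual features are gated on the server's maxWireVersion alone.

```python
# Hypothetical wire version range supported by this driver
DRIVER_MIN_WIRE_VERSION = 6
DRIVER_MAX_WIRE_VERSION = 25

# Minimum wire versions for some features, per the feature list table
OP_MSG_SUPPORT = 6
REPLICA_SET_TRANSACTIONS = 7
SHARDED_TRANSACTIONS = 8
DELETE_HINT = 9

def is_compatible(hello: dict) -> bool:
    """Driver and server are compatible when their wire version ranges overlap."""
    server_min = hello.get("minWireVersion", 0)
    server_max = hello.get("maxWireVersion", 0)
    return server_min <= DRIVER_MAX_WIRE_VERSION and server_max >= DRIVER_MIN_WIRE_VERSION

def supports(hello: dict, min_wire_version: int) -> bool:
    """Gate a feature on maxWireVersion, never on the X.Y.Z server version string."""
    return hello.get("maxWireVersion", 0) >= min_wire_version
```

For a server reporting `maxWireVersion: 9`, `supports(hello, DELETE_HINT)` is true while `supports(hello, 13)` is false.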

When in doubt, use “MUST” not “SHOULD” in specs

Specs guide our work. While there are occasionally valid technical reasons for drivers to differ in their behavior, avoid encouraging it with a wishy-washy “SHOULD” instead of a more assertive “MUST”.

Defy augury

While we have some idea of what the server will do in the future, don’t design features with those expectations in mind. Design and implement based on what is expected in the next release.

Case Study: In designing OP_MSG, we held off on designing support for Document Sequences in Replies in drivers until the server would support it. We subsequently decided not to implement that feature in the server.

The best way to see what the server does is to test it

For any unusual case, relying on documentation or anecdote to anticipate the server’s behavior in different versions/topologies/etc. is error-prone. The best way to check the server’s behavior is to use a driver or the shell and test it directly.

Drivers follow semantic versioning

Drivers should follow X.Y.Z versioning, where breaking API changes require a bump to X. See semver.org for more.

Backward breaking behavior changes and semver

Backward breaking behavior changes can be more dangerous and disruptive than backward breaking API changes. When thinking about the implications of a behavior change, ask yourself what could happen if a user upgraded your library without carefully reading the changelog and/or adequately testing the change.

Server Wire version and Feature List

| Server version | Wire version | Feature List |

|---|---|---|

| 2.6 | 1 |

Aggregation cursor Auth commands |

| 2.6 | 2 |

Write commands (insert/update/delete) Aggregation $out pipeline operator |

| 3.0 | 3 |

listCollections listIndexes SCRAM-SHA-1 explain command |

| 3.2 | 4 |

(find/getMore/killCursors) commands currentOp command fsyncUnlock command findAndModify take write concern Commands take read concern Document-level validation explain command supports distinct and findAndModify |

| 3.4 | 5 |

Commands take write concern Commands take collation |

| 3.6 | 6 |

Supports OP_MSG Collection-level ChangeStream support Retryable Writes Causally Consistent Reads Logical Sessions update “arrayFilters” option |

| 4.0 | 7 |

ReplicaSet transactions Database and cluster-level change streams and startAtOperationTime option |

| 4.2 | 8 |

Sharded transactions Aggregation $merge pipeline operator update “hint” option |

| 4.4 | 9 |

Streaming protocol for SDAM ResumableChangeStreamError error label delete “hint” option findAndModify “hint” option createIndexes “commitQuorum” option |

| 5.0 | 13 | $out and $merge on secondaries (technically FCV 4.4+) |

| 5.1 | 14 | |

| 5.2 | 15 | |

| 5.3 | 16 | |

| 6.0 | 17 |

Support for Partial Indexes Sharded Time Series Collections FCV set to 5.0 |

| 6.1 | 18 |

Update Perl Compatible Regular Expressions version to PCRE2 Add |

| 6.2 | 19 |

Collection validation ensures BSON documents conform to BSON spec Collection validation checks time series collections for internal consistency |

| 7.0 | 21 |

Atlas Search Index Management

Compound Wildcard Indexes Support large change stream events via

Slot Based Query Execution |

| 7.1 | 22 |

Improved Index Builds Exhaust Cursors Enabled for Sharded Clusters New Sharding Statistics for Chunk Migrations Self-Managed Backups of Sharded Clusters |

| 7.2 | 23 |

Database Validation on

Default Chunks Per Shard |

| 7.3 | 24 |

Compaction Improvements New |

| 8.0 | 25 |

Range Encryption GA OIDC authentication mechanism New

|

In server versions 5.0 and earlier, the wire version was defined as a numeric literal in src/mongo/db/wire_version.h. Since server version 5.1 (SERVER-58346), the wire version is derived from the number of releases since 4.0 (using src/mongo/util/version/releases.tpl.h and src/mongo/util/version/releases.yml).

BSON

The latest version of the specification can be found at https://bsonspec.org/spec.html.

Specification Version 1.1

BSON is a binary format in which zero or more ordered key/value pairs are stored as a single entity. We call this entity a document.

The following grammar specifies version 1.1 of the BSON standard. We’ve written the grammar using a pseudo-BNF syntax. Valid BSON data is represented by the document non-terminal.

Basic Types

The following basic types are used as terminals in the rest of the grammar. Each type must be serialized in little-endian format.

byte 1 byte (8-bits)

signed_byte(n) 8-bit, two's complement signed integer for which the value is n

unsigned_byte(n) 8-bit unsigned integer for which the value is n

int32 4 bytes (32-bit signed integer, two's complement)

int64 8 bytes (64-bit signed integer, two's complement)

uint64 8 bytes (64-bit unsigned integer)

double 8 bytes (64-bit IEEE 754-2008 binary floating point)

decimal128 16 bytes (128-bit IEEE 754-2008 decimal floating point)

Non-terminals

The following specifies the rest of the BSON grammar. Note that we use the * operator as shorthand for repetition (e.g. (byte*2) is byte byte). When used as a unary operator, * means that the repetition can occur 0 or more times.

document ::= int32 e_list unsigned_byte(0) BSON Document. int32 is the total number of bytes comprising the document.

e_list ::= element e_list

| ""

element ::= signed_byte(1) e_name double 64-bit binary floating point

| signed_byte(2) e_name string UTF-8 string

| signed_byte(3) e_name document Embedded document

| signed_byte(4) e_name document Array

| signed_byte(5) e_name binary Binary data

| signed_byte(6) e_name Undefined (value) — Deprecated

| signed_byte(7) e_name (byte*12) ObjectId

| signed_byte(8) e_name unsigned_byte(0) Boolean - false

| signed_byte(8) e_name unsigned_byte(1) Boolean - true

| signed_byte(9) e_name int64 UTC datetime

| signed_byte(10) e_name Null value

| signed_byte(11) e_name cstring cstring Regular expression - The first cstring is the regex pattern, the second is the regex options string. Options are identified by characters, which must be stored in alphabetical order. Valid option characters are i for case insensitive matching, m for multiline matching, s for dotall mode ("." matches everything), x for verbose mode, and u to make "\w", "\W", etc. match Unicode.

| signed_byte(12) e_name string (byte*12) DBPointer — Deprecated

| signed_byte(13) e_name string JavaScript code

| signed_byte(14) e_name string Symbol — Deprecated

| signed_byte(15) e_name code_w_s JavaScript code with scope — Deprecated

| signed_byte(16) e_name int32 32-bit integer

| signed_byte(17) e_name uint64 Timestamp

| signed_byte(18) e_name int64 64-bit integer

| signed_byte(19) e_name decimal128 128-bit decimal floating point

| signed_byte(-1) e_name Min key

| signed_byte(127) e_name Max key

e_name ::= cstring Key name

string ::= int32 (byte*) unsigned_byte(0) String - The int32 is the number of bytes in the (byte*) plus one for the trailing null byte. The (byte*) is zero or more UTF-8 encoded characters.

cstring ::= (byte*) unsigned_byte(0) Zero or more modified UTF-8 encoded characters followed by the null byte. The (byte*) MUST NOT contain unsigned_byte(0), hence it is not full UTF-8.

binary ::= int32 subtype (byte*) Binary - The int32 is the number of bytes in the (byte*).

subtype ::= unsigned_byte(0) Generic binary subtype

| unsigned_byte(1) Function

| unsigned_byte(2) Binary (Old)

| unsigned_byte(3) UUID (Old)

| unsigned_byte(4) UUID

| unsigned_byte(5) MD5

| unsigned_byte(6) Encrypted BSON value

| unsigned_byte(7) Compressed BSON column

| unsigned_byte(8) Sensitive

| unsigned_byte(128)—unsigned_byte(255) User defined

code_w_s ::= int32 string document Code with scope — Deprecated

Notes

- Array - The document for an array is a normal BSON document with integer values for the keys, starting with 0 and continuing sequentially. For example, the array ['red', 'blue'] would be encoded as the document {'0': 'red', '1': 'blue'}. The keys must be in ascending numerical order.

- UTC datetime - The int64 is UTC milliseconds since the Unix epoch.

- Timestamp - Special internal type used by MongoDB replication and sharding. First 4 bytes are an increment, second 4 are a timestamp.

- Min key - Special type which compares lower than all other possible BSON element values.

- Max key - Special type which compares higher than all other possible BSON element values.

- Generic binary subtype - This is the most commonly used binary subtype and should be the ‘default’ for drivers and tools.

- Compressed BSON Column - Compact storage of BSON data. This data type uses delta and delta-of-delta compression and run-length-encoding for efficient element storage. Also has an encoding for sparse arrays containing missing values.

- The BSON “binary” or “BinData” datatype is used to represent arrays of bytes. It is somewhat analogous to the Java notion of a ByteArray. BSON binary values have a subtype. This is used to indicate what kind of data is in the byte array. Subtypes from 0 to 127 are predefined or reserved. Subtypes from 128 to 255 are user-defined.

- unsigned_byte(2) Binary (Old) - This used to be the default subtype, but was deprecated in favor of subtype 0. Drivers and tools should be sure to handle subtype 2 appropriately. The structure of the binary data (the byte* array in the binary non-terminal) must be an int32 followed by a (byte*). The int32 is the number of bytes in the repetition.

- unsigned_byte(3) UUID (Old) - This used to be the UUID subtype, but was deprecated in favor of subtype 4. Drivers and tools for languages with a native UUID type should handle subtype 3 appropriately.

- unsigned_byte(128)—unsigned_byte(255) User defined subtypes. The binary data can be anything.

- Code with scope - Deprecated. The int32 is the length in bytes of the entire code_w_s value. The string is JavaScript code. The document is a mapping from identifiers to values, representing the scope in which the string should be evaluated.
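To tie the grammar and notes together, here is a minimal Python encoder for a subset of BSON: double, string, embedded document, array, boolean, null, int32 and int64. It is an illustrative sketch, not a complete or validated implementation, and the function names are hypothetical. Other element types, key validation beyond the null-byte check, and document size limits are omitted.

```python
import struct

def _cstring(s: str) -> bytes:
    raw = s.encode("utf-8")
    if b"\x00" in raw:
        raise ValueError("cstring must not contain a null byte")
    return raw + b"\x00"

def _element(name: str, value) -> bytes:
    key = _cstring(name)
    if isinstance(value, bool):  # test bool before int: bool is a subclass of int
        return b"\x08" + key + (b"\x01" if value else b"\x00")
    if isinstance(value, int):
        if -2**31 <= value < 2**31:
            return b"\x10" + key + struct.pack("<i", value)  # int32
        return b"\x12" + key + struct.pack("<q", value)      # int64
    if isinstance(value, float):
        return b"\x01" + key + struct.pack("<d", value)      # double
    if isinstance(value, str):
        data = value.encode("utf-8") + b"\x00"
        # int32 length counts the UTF-8 bytes plus the trailing null
        return b"\x02" + key + struct.pack("<i", len(data)) + data
    if value is None:
        return b"\x0a" + key
    if isinstance(value, dict):
        return b"\x03" + key + encode_document(value)
    if isinstance(value, list):
        # Arrays are documents keyed "0", "1", "2", ... in ascending order
        return b"\x04" + key + encode_document({str(i): v for i, v in enumerate(value)})
    raise TypeError(f"cannot encode {type(value).__name__}")

def encode_document(doc: dict) -> bytes:
    body = b"".join(_element(k, v) for k, v in doc.items())
    # int32 total length counts itself (4 bytes), the elements, and the final 0x00
    return struct.pack("<i", 4 + len(body) + 1) + body + b"\x00"
```

For example, `{"hello": "world"}` encodes to the 22 bytes `\x16\x00\x00\x00\x02hello\x00\x06\x00\x00\x00world\x00\x00`.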

BSON Binary Subtype 9 - Vector

- Status: Accepted

- Minimum Server Version: N/A

Abstract

This document describes the subtype of the Binary BSON type used for efficient storage and retrieval of vectors. Vectors here refer to densely packed arrays of numbers, all of the same type.

Motivation

These representations correspond to the numeric types supported by popular numerical libraries for vector processing, such as NumPy, PyTorch, TensorFlow and Apache Arrow. Storing and retrieving vector data using the same densely packed format used by these libraries can result in significant memory savings and processing efficiency.

META

The keywords “MUST”, “MUST NOT”, “REQUIRED”, “SHALL”, “SHALL NOT”, “SHOULD”, “SHOULD NOT”, “RECOMMENDED”, “MAY”, and “OPTIONAL” in this document are to be interpreted as described in RFC 2119.

Specification

This specification introduces a new BSON binary subtype, the vector, with value 9.

Drivers SHOULD provide idiomatic APIs to translate between arrays of numbers and this BSON Binary specification.

Data Types (dtypes)

Each vector can take one of multiple data types (dtypes). The following table lists the dtypes implemented.

| Vector data type | Alias | Bits per vector element | Arrow Data Type (for illustration) |
|---|---|---|---|
| 0x03 | INT8 | 8 | INT8 |
| 0x27 | FLOAT32 | 32 | FLOAT |
| 0x10 | PACKED_BIT | 1 * | BOOL |

* A Binary Quantized (PACKED_BIT) Vector is a vector of 0s and 1s (bits), but it is represented in memory as a list of integers in [0, 255]. So, for example, the vector [0, 255] would be shorthand for the 16-bit vector [0,0,0,0,0,0,0,0,1,1,1,1,1,1,1,1]. The idea is that each number (a uint8) can be stored as a single byte. Of course, some languages, Python for one, do not have a uint8 type, so the values must be represented as ints in memory, but not on disk.

Byte padding

Because a vector’s total bit length is not always a multiple of 8 (a PACKED_BIT vector of 12 elements occupies 12 bits, for instance), it does not always fit squarely into a whole number of bytes. A second piece of metadata, the “padding”, is therefore included. It tells the driver how many bits in the final byte are to be ignored. The least-significant bits are ignored.

Binary structure

Following the binary subtype 9, a two-element byte array of metadata precedes the packed numbers.

- The first byte (dtype) describes its data type. The table above shows those that MUST be implemented. This table may grow. dtype is an unsigned integer.
- The second byte (padding) prescribes the number of bits to ignore in the final byte of the value. It is a non-negative integer. It must always be present; when padding does not apply, it is set to zero.
- The remainder contains the actual vector elements packed according to dtype.

All values use the little-endian format.

Example

Let’s take a vector [238, 224] of dtype PACKED_BIT (\x10) with a padding of 4.

In hex, it looks like this: b"\x10\x04\xee\xe0": 1 byte for dtype, 1 for padding, and 1 for each uint8.

We can visualize the binary representation like so:

| 1st byte: dtype (from list in previous table) | 2nd byte: padding (values in [0,7]) | 1st uint8: 238 | 2nd uint8: 224 |
|---|---|---|---|
| 0 0 0 1 0 0 0 0 | 0 0 0 0 0 1 0 0 | 1 1 1 0 1 1 1 0 | 1 1 1 0 0 0 0 0 |

Finally, after we remove the last 4 bits of padding, the actual bit vector has a length of 12 and looks like this:

[1, 1, 1, 0, 1, 1, 1, 0, 1, 1, 1, 0]
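The packing and padding rules can be checked with a short Python sketch (standard library only; `pack_bits` and `unpack_bits` are hypothetical helper names). Bits are packed most significant bit first, which matches the worked example. The final byte's unused low-order bits are left as zero.

```python
def pack_bits(bits):
    """Pack 0/1 values into uint8 values, MSB first; return (packed, padding)."""
    packed = []
    for start in range(0, len(bits), 8):
        chunk = bits[start:start + 8]
        byte = 0
        for bit in chunk:
            if bit not in (0, 1):
                raise ValueError("PACKED_BIT elements must be 0 or 1")
            byte = (byte << 1) | bit
        # Left-align a short final chunk so the ignored low-order bits are zero
        packed.append(byte << (8 - len(chunk)))
    padding = (-len(bits)) % 8  # unused bits in the final byte
    return packed, padding

def unpack_bits(packed, padding):
    """Expand uint8 values into bits, MSB first, dropping `padding` trailing bits."""
    bits = [(byte >> shift) & 1 for byte in packed for shift in range(7, -1, -1)]
    return bits[:len(bits) - padding]
```

`pack_bits([1, 1, 1, 0, 1, 1, 1, 0, 1, 1, 1, 0])` returns `([238, 224], 4)`, reproducing the example's data and padding bytes.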

API Guidance

Drivers MUST implement methods for explicit encoding and decoding that adhere to the pattern described below while following idioms of the language of the driver.

Encoding

Function from_vector(vector: Iterable<Number>, dtype: DtypeEnum, padding: Integer = 0) -> Binary
    # Converts a numeric vector into a binary representation based on the specified dtype and padding.
    # :param vector: A sequence or iterable of numbers (either float or int)
    # :param dtype: Data type for binary conversion (from DtypeEnum)
    # :param padding: Optional integer specifying how many bits to ignore in the final byte
    # :return: A binary representation of the vector
    Declare binary_data as Binary
    # Process each number in vector and convert according to dtype
    For each number in vector
        binary_element = convert_to_binary(number, dtype)
        binary_data.append(binary_element)
    End For
    # Apply padding to the binary data if needed
    If padding > 0
        apply_padding(binary_data, padding)
    End If
    Return binary_data
End Function

Note: If a driver chooses to implement a Vector type (or several) like the one suggested in the Data Structures subsection below, it MAY define from_vector to take a single argument, a Vector.
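One possible Python rendering of the encoding pseudocode uses only the standard library's `struct` module. It returns the raw subtype 9 payload (dtype byte, padding byte, packed elements) rather than a driver's Binary type. The `Dtype` enum and `from_vector` names mirror the pseudocode but are otherwise assumptions. PACKED_BIT input is taken as already-packed uint8 values, and the rules from the Validation subsection are not applied here.

```python
import struct
from enum import IntEnum

class Dtype(IntEnum):
    INT8 = 0x03
    FLOAT32 = 0x27
    PACKED_BIT = 0x10

def from_vector(vector, dtype: Dtype, padding: int = 0) -> bytes:
    """Return the subtype 9 payload: dtype byte, padding byte, packed elements."""
    n = len(vector)
    if dtype == Dtype.INT8:
        body = struct.pack(f"<{n}b", *vector)  # signed bytes in [-128, 127]
    elif dtype == Dtype.FLOAT32:
        body = struct.pack(f"<{n}f", *vector)  # little-endian IEEE 754 float32
    elif dtype == Dtype.PACKED_BIT:
        body = struct.pack(f"<{n}B", *vector)  # already-packed uint8 in [0, 255]
    else:
        raise ValueError(f"unsupported dtype {dtype!r}")
    return bytes([dtype, padding]) + body
```

`from_vector([238, 224], Dtype.PACKED_BIT, padding=4)` yields `b"\x10\x04\xee\xe0"`, the payload from the example above.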

Decoding

Function as_vector() -> Vector
    # Unpacks binary data (BSON or similar) into a Vector structure.
    # This process involves extracting numeric values, the data type, and padding information.
    # :return: A BinaryVector containing the unpacked numeric values, dtype, and padding.
    Declare binary_vector as BinaryVector # Struct to hold the unpacked data
    # Extract dtype (data type) from the binary data
    binary_vector.dtype = extract_dtype_from_binary()
    # Extract padding from the binary data
    binary_vector.padding = extract_padding_from_binary()
    # Unpack the actual numeric values from the binary data according to the dtype
    binary_vector.data = unpack_numeric_values(binary_vector.dtype)
    Return binary_vector
End Function
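A matching Python sketch of decoding also operates on the raw subtype 9 payload. The `Vector` dataclass corresponds to the struct in the Data Structures subsection. As in the pseudocode, PACKED_BIT data is returned as packed uint8 values together with the padding. Only the FLOAT32 length check from the Validation subsection is applied. All names are illustrative assumptions.

```python
import struct
from dataclasses import dataclass

INT8, FLOAT32, PACKED_BIT = 0x03, 0x27, 0x10

@dataclass
class Vector:
    data: list    # numeric values (float or int)
    dtype: int    # dtype byte
    padding: int  # bits to ignore in the final byte

def as_vector(payload: bytes) -> Vector:
    if len(payload) < 2:
        raise ValueError("payload must start with dtype and padding bytes")
    dtype, padding, body = payload[0], payload[1], payload[2:]
    if dtype == INT8:
        data = list(struct.unpack(f"<{len(body)}b", body))
    elif dtype == FLOAT32:
        if len(body) % 4:
            raise ValueError("FLOAT32 data length must be a multiple of 4 bytes")
        data = list(struct.unpack(f"<{len(body) // 4}f", body))
    elif dtype == PACKED_BIT:
        data = list(body)  # packed uint8 values; padding applies to the last one
    else:
        raise ValueError(f"unsupported dtype 0x{dtype:02x}")
    return Vector(data, dtype, padding)
```

Decoding `b"\x10\x04\xee\xe0"` gives `Vector(data=[238, 224], dtype=16, padding=4)`.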

Validation

Drivers MUST validate vector metadata and raise an exception if any invariant is violated:

- When unpacking binary data into a FLOAT32 Vector structure, the length of the binary data following the dtype and padding MUST be a multiple of 4 bytes.

- Padding MUST be 0 for all dtypes where padding doesn’t apply, and MUST be within [0, 7] for PACKED_BIT.

- A PACKED_BIT vector MUST NOT be empty if padding is in the range [1, 7].

- For a PACKED_BIT vector with non-zero padding, ignored bits SHOULD be zero.
  - When encoding, if ignored bits aren’t zero, drivers SHOULD raise an exception, but drivers MAY leave them as-is if backwards-compatibility is a concern.
  - When decoding, drivers SHOULD raise an exception if decoding non-zero ignored bits, but drivers MAY choose not to for backwards compatibility.
  - Drivers SHOULD use the next major release to conform to ignored bits being zero.


Drivers MUST perform this validation when a numeric vector and padding are provided through the API, and when unpacking binary data (BSON or similar) into a Vector structure.
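The invariants above can be collected into a single check. This Python sketch applies them to the dtype byte, the padding byte and the element bytes. `validate_vector` and its `strict_ignored_bits` flag are hypothetical. The flag models the backwards-compatibility allowance for non-zero ignored bits.

```python
INT8, FLOAT32, PACKED_BIT = 0x03, 0x27, 0x10

def validate_vector(dtype: int, padding: int, body: bytes, strict_ignored_bits: bool = True) -> None:
    """Raise ValueError if vector metadata violates the validation rules."""
    if dtype == FLOAT32 and len(body) % 4 != 0:
        raise ValueError("FLOAT32 data must be a multiple of 4 bytes")
    if dtype != PACKED_BIT:
        if padding != 0:
            raise ValueError("padding must be 0 for dtypes other than PACKED_BIT")
        return
    if not 0 <= padding <= 7:
        raise ValueError("PACKED_BIT padding must be within [0, 7]")
    if padding and not body:
        raise ValueError("an empty PACKED_BIT vector must have zero padding")
    # The ignored bits are the `padding` least-significant bits of the final byte.
    ignored_mask = (1 << padding) - 1
    if strict_ignored_bits and padding and body[-1] & ignored_mask:
        raise ValueError("ignored bits in the final byte should be zero")
```

With a padding of 4, a final byte of 0xe0 passes, whereas 0xe1 is rejected unless `strict_ignored_bits` is turned off.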

Data Structures

Drivers MAY find the following structures to represent the dtype and vector structure useful.

Enum Dtype
    # Enum for data types (dtype)
    # FLOAT32: Represents packing of list of floats as float32
    #   Value: 0x27 (hexadecimal byte value)
    # INT8: Represents packing of list of signed integers in the range [-128, 127] as signed int8
    #   Value: 0x03 (hexadecimal byte value)
    # PACKED_BIT: Special case where vector values are 0 or 1, packed as unsigned uint8 in range [0, 255]
    #   Packed into groups of 8 (a byte)
    #   Value: 0x10 (hexadecimal byte value)
    # Documentation:
    #   Each value is a byte (length of one), a convenient choice for decoding.
End Enum

Struct Vector
    # Numeric vector with metadata for binary interoperability
    # Fields:
    #   data: Sequence of numeric values (either float or int)
    #   dtype: Data type of vector (from enum BinaryVectorDtype)
    #   padding: Number of bits to ignore in the final byte for alignment
    data      # Sequence of float or int
    dtype     # Type: DtypeEnum
    padding   # Integer: Number of padding bits
End Struct

Reference Implementation

- PYTHON (PYTHON-4577)

Test Plan

See the README for tests.

FAQ

- What MongoDB Server version does this apply to?
  - Files in the “specifications” repository have no version scheme. They are not tied to a MongoDB server version.
- In PACKED_BIT, why would one choose to use integers in [0, 256)?
  - This follows a well-established precedent for packing binary-valued arrays into bytes (8 bits). This technique is widely used across different fields, such as data compression, communication protocols, and file formats, where you want to store or transmit binary data more efficiently by grouping 8 bits into a single byte (uint8). For an example in Python, see numpy.unpackbits.
- In PACKED_BIT, why are ignored bits recommended to be zero?
  - To ensure the same data representation has the same encoding. For drivers supporting comparison operations, this avoids comparing different unused bits.

Changelog

- 2025-06-23: In PACKED_BIT vectors, ignored bits MAY be zero for backwards-compatibility. Prose tests added.
- 2025-04-08: In PACKED_BIT vectors, ignored bits must be zero.
- 2025-03-07: Update tests to use Extended JSON representation of +/-Infinity. (DRIVERS-3095)
- 2025-02-04: Update validation for decoding into a FLOAT32 vector.
- 2024-11-01: BSON Binary Subtype 9 accepted DRIVERS-2926 (#1708)

BSON ObjectID

- Status: Accepted

- Minimum Server Version: N/A

Abstract

This specification documents the format and data contents of ObjectID BSON values that the drivers and the server generate when no field values have been specified (e.g. creating an ObjectID BSON value when no _id field is present in a document). It is primarily intended to provide an alternative to the historical use of the MD5 hashing algorithm for the machine information field of the ObjectID, which is problematic when providing a FIPS-compliant implementation. It also documents existing best practices for the timestamp and counter fields.

META

The keywords “MUST”, “MUST NOT”, “REQUIRED”, “SHALL”, “SHALL NOT”, “SHOULD”, “SHOULD NOT”, “RECOMMENDED”, “MAY”, and “OPTIONAL” in this document are to be interpreted as described in RFC 2119.

Specification

The ObjectID BSON type is a 12-byte value consisting of three different portions (fields):

- a 4-byte value representing the seconds since the Unix epoch in the highest order bytes,

- a 5-byte random number unique to a machine and process,

- a 3-byte counter, starting with a random value.

4 byte timestamp    5 byte process unique    3 byte counter
|<----------------->|<---------------------->|<------------>|
[----|----|----|----|----|----|----|----|----|----|----|----]
0                   4                   8                   12

Timestamp Field

This 4-byte big-endian field represents the seconds since the Unix epoch (Jan 1st, 1970, midnight UTC). It is an ever-increasing value that can represent dates until Feb 7th, 2106 06:28:15 UTC.

Drivers MUST create ObjectIDs with this value representing the number of seconds since the Unix epoch.

Drivers MUST interpret this value as an unsigned 32-bit integer when converting it to language-specific date/time values or to a timestamp.

Drivers SHOULD have an accessor method on an ObjectID class for obtaining the timestamp value.
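The unsigned interpretation matters for values at and above 0x80000000. A minimal Python sketch of encoding the field and of a timestamp accessor (the names encode_timestamp and get_timestamp are hypothetical) reads the first four bytes with struct's ">I" format, which is big-endian and unsigned, so it never produces a pre-1970 date:

```python
import struct
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def encode_timestamp(seconds: int) -> bytes:
    """Encode seconds since the Unix epoch as the 4-byte big-endian field."""
    return struct.pack(">I", seconds)  # struct.error if outside 0..2**32 - 1


def get_timestamp(object_id: bytes) -> datetime:
    """Hypothetical accessor: read bytes 0-3 of an ObjectID as an unsigned int."""
    (seconds,) = struct.unpack(">I", object_id[:4])  # ">I" never yields a negative
    # Adding a timedelta avoids platform limits of datetime.fromtimestamp.
    return EPOCH + timedelta(seconds=seconds)
```

With this, 0x7FFFFFFF maps to Jan 19th, 2038 03:14:07 UTC and 0xFFFFFFFF to Feb 7th, 2106 06:28:15 UTC, matching the values in the test plan.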

Random Value

A 5-byte field consisting of a random value generated once per process. This random value is unique to the machine and process.

Drivers MUST NOT have an accessor method on an ObjectID class for obtaining this value.

The random number does not have to be cryptographic. If possible, use a PRNG with OS supplied entropy that SHOULD NOT block to wait for more entropy to become available. Otherwise, seed a deterministic PRNG to ensure uniqueness of process and machine by combining time, process ID, and hostname.

Counter

A 3-byte big endian counter.

This counter MUST be initialised to a random value when the driver is first activated. After initialisation, the counter MUST be increased by 1 for every ObjectID creation.

When the counter overflows (i.e., hits 16777215+1), the counter MUST be reset to 0.

Drivers MUST NOT have an accessor method on an ObjectID class for obtaining this value.

The random number does not have to be cryptographic. If possible, use a PRNG with OS supplied entropy that SHOULD NOT block to wait for more entropy to become available. Otherwise, seed a deterministic PRNG to ensure uniqueness of process and machine by combining time, process ID, and hostname.
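The three fields can be combined into a generator along the following lines. This is a minimal Python sketch, not a reference implementation: the class name ObjectIdGenerator is hypothetical, os.urandom stands in for the OS-seeded PRNG, and os.register_at_fork (POSIX, Python 3.7+) is used so that a forked child gets a new random value, as the fork test in the test plan requires.

```python
import os
import struct
import threading
import time


class ObjectIdGenerator:
    """Hypothetical generator for the 4 + 5 + 3 byte ObjectID layout."""

    def __init__(self) -> None:
        self._reset()
        # POSIX only: regenerate the per-process state in forked children.
        if hasattr(os, "register_at_fork"):
            os.register_at_fork(after_in_child=self._reset)

    def _reset(self) -> None:
        # A fresh lock, in case another thread held the old one at fork time.
        self._lock = threading.Lock()
        self._random = os.urandom(5)                          # once per process
        self._counter = int.from_bytes(os.urandom(3), "big")  # random start

    def generate(self) -> bytes:
        with self._lock:
            counter = self._counter
            self._counter = (counter + 1) % 0x1000000  # 0xFFFFFF wraps to 0
        seconds = int(time.time()) & 0xFFFFFFFF        # keep within 32 bits
        return struct.pack(">I", seconds) + self._random + counter.to_bytes(3, "big")
```

A driver would typically keep a single module-level instance, since each instance registers its own fork handler.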

Test Plan

Drivers MUST:

- Ensure that the Timestamp field is represented as an unsigned 32-bit integer representing the number of seconds since the Epoch for the following Timestamp values:

  - 0x00000000: To match "Jan 1st, 1970 00:00:00 UTC"
  - 0x7FFFFFFF: To match "Jan 19th, 2038 03:14:07 UTC"
  - 0x80000000: To match "Jan 19th, 2038 03:14:08 UTC"
  - 0xFFFFFFFF: To match "Feb 7th, 2106 06:28:15 UTC"

- Ensure that the Counter field successfully overflows its sequence from 0xFFFFFF to 0x000000.

- Ensure that after a new process is created through a fork() or similar process creation operation, the “random number unique to a machine and process” differs from that of the parent process that created the new process.

Motivation for Change

Besides the specific exclusion of MD5 as an allowed hashing algorithm, the information in this specification is meant to align the ObjectID generation algorithm of both drivers and the server.

Design Rationale

Timestamp: The timestamp is a 32-bit unsigned integer, as it allows us to extend the furthest date that the timestamp can represent from the year 2038 to 2106. There is no reason why MongoDB would generate a timestamp to mean a date before 1970, as MongoDB did not exist back then.

Random Value: Originally, this field consisted of the Machine ID and Process ID fields. There were numerous divergences between drivers due to implementation choices, and the Machine ID field traditionally used the MD5 hashing algorithm which can’t be used on FIPS compliant machines. In order to allow for a similar behaviour among all drivers and the MongoDB Server, these two fields have been collated together into a single 5-byte random value, unique to a machine and process.

Counter: The counter makes it possible to have multiple ObjectIDs per second, per server, and per process. As the counter can overflow, there is a possibility of having duplicate ObjectIDs if you create more than 16 million ObjectIDs per second in the same process on a single machine.

Endianness: The Timestamp and Counter are big endian because we can then use memcmp to order ObjectIDs, and we want to ensure an increasing order.
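The endianness argument can be checked directly. Python compares bytes objects lexicographically by unsigned byte value, which is the same ordering memcmp produces for equal-length buffers. The helper memcmp_order_matches below is a hypothetical illustration that reports whether sorting the encoded bytes agrees with sorting the numbers:

```python
from typing import List


def memcmp_order_matches(values: List[int], byteorder: str) -> bool:
    """True if byte-wise (memcmp-style) order of the encodings matches numeric order."""
    encoded_then_sorted = sorted(v.to_bytes(4, byteorder) for v in values)
    sorted_then_encoded = [v.to_bytes(4, byteorder) for v in sorted(values)]
    return encoded_then_sorted == sorted_then_encoded
```

For the values 255, 256, and 65536, the big-endian encodings sort correctly, while the little-endian encodings do not (255 encodes as ff 00 00 00 and sorts last).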

Backwards Compatibility

This specification requires that the existing Machine ID and Process ID fields are merged into a single 5-byte value. This will change the behaviour of ObjectID generation, as well as the behaviour of drivers that currently have getters and setters for the original Machine ID and Process ID fields.

Reference Implementation

There is currently no full reference implementation.

Changelog

- 2024-07-30: Migrated from reStructuredText to Markdown.

- 2022-10-05: Remove spec front matter and reformat changelog.

- 2019-01-14: Clarify that the random numbers don’t need to be cryptographically secure. Add a test to test that the unique value is different in forked processes.

- 2018-10-11: Clarify that the Timestamp and Counter fields are big endian, and add the reason why.

- 2018-07-02: Replaced Machine ID and Process ID fields with a single 5-byte unique value

- 2018-05-22: Initial Release

BSON Decimal128

- Status: Accepted

- Minimum Server Version: 3.4

Abstract

MongoDB 3.4 introduces a new BSON type representing high-precision decimal values ("\x13"), known as Decimal128. 3.4-compatible drivers must support this type by creating a Value Object for it, possibly with accessor functions for retrieving its value in data types supported by the respective languages.

Round-tripping Decimal128 values between driver and server MUST NOT change their value or representation in any way. Conversion to and from native language types is complicated, and there are many pitfalls in representing Decimal128 precisely in all languages.

While many languages offer a native decimal type, the precision of these types often does not exactly match that of the MongoDB implementation. To ensure error-free conversion and consistency between official MongoDB drivers, this specification does not allow automatically converting the BSON Decimal128 type into a language-defined decimal type.

Language drivers will wrap their native type in value objects by default and SHOULD offer accessor functions for retrieving its value represented by language-defined types if appropriate. A driver that offers the ability to configure mappings to/from BSON types to native types MAY allow the option to automatically convert the BSON Decimal128 type to a native type. It should however be made abundantly clear to the user that converting to native data types risks incurring data loss.

META

The keywords “MUST”, “MUST NOT”, “REQUIRED”, “SHALL”, “SHALL NOT”, “SHOULD”, “SHOULD NOT”, “RECOMMENDED”, “MAY”, and “OPTIONAL” in this document are to be interpreted as described in RFC 2119.

Terminology

IEEE 754-2008 128-bit decimal floating point (Decimal128)

The Decimal128 specification supports 34 decimal digits of precision, a max value of approximately 10^6145, and min value of approximately -10^6145. This is the new BSON Decimal128 type ("\x13").

Clamping

Clamping happens when a value’s exponent is too large for the destination format. This works by adding zeros to the coefficient to reduce the exponent to the largest usable value. An overflow occurs if the number of digits required is more than allowed in the destination format.

Binary Integer Decimal (BID)

MongoDB uses this binary encoding for the coefficient as specified in IEEE 754-2008 section 3.5.2 using method 2 “binary encoding” rather than method 1 “decimal encoding”. The byte order is little-endian, like the rest of the BSON types.

Value Object

An immutable container type representing a value (e.g. Decimal128). This Value Object MAY provide accessors that retrieve the abstracted value as a different type (e.g. casting it), for example: double x = valueObject.getAsDouble();

Specification

BSON Decimal128 implementation details

The BSON Decimal128 data type implements the Decimal Arithmetic Encodings specification, with certain exceptions around value integrity and the coefficient encoding. When a value cannot be represented exactly, the value will be rejected.

The coefficient MUST be stored as an unsigned binary integer (BID) rather than the densely-packed decimal (DPD) shown in the specification. See either the IEEE Std 754-2008 spec or the driver examples for further detail.
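For finite values the BID layout is simple enough to sketch. The Python function below (encode_decimal128 is a hypothetical name) handles only finite values whose coefficient and exponent already fit, and it raises rather than clamping or rounding. It assumes the IEEE 754-2008 decimal128 parameters: an exponent bias of 6176, exponents from -6176 to 6111, and coefficients up to 10^34 - 1. Because 10^34 - 1 is below 2^113, the coefficient always fits the form with a 14-bit biased exponent directly after the sign bit.

```python
from decimal import Decimal

EXPONENT_BIAS = 6176              # decimal128 exponent bias
MIN_EXPONENT, MAX_EXPONENT = -6176, 6111
MAX_COEFFICIENT = 10**34 - 1      # 34 decimal digits of precision


def encode_decimal128(value: Decimal) -> bytes:
    """Encode a finite Decimal as 16 little-endian BID bytes (sketch only)."""
    if not value.is_finite():
        raise ValueError("special values are not handled in this sketch")
    sign, digits, exponent = value.as_tuple()
    coefficient = int("".join(map(str, digits)))  # kept as-is: no normalization
    if coefficient > MAX_COEFFICIENT or not MIN_EXPONENT <= exponent <= MAX_EXPONENT:
        raise ValueError("value would need clamping or rounding")
    # High 64 bits: sign bit, 14-bit biased exponent, top 49 coefficient bits.
    high = (sign << 63) | ((exponent + EXPONENT_BIAS) << 49) | (coefficient >> 64)
    low = coefficient & 0xFFFFFFFFFFFFFFFF
    # Little-endian like the rest of BSON: the low 64 bits come first.
    return low.to_bytes(8, "little") + high.to_bytes(8, "little")
```

Because the coefficient and exponent are taken verbatim, "2.00" (200E-2) and "2.0" (20E-1) encode to different bytes, which is the non-normalizing behavior described in the Q&A.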

The specification defines several statuses which are meant to signal exceptional circumstances, such as when overflowing occurs, and how to handle them.

BSON Decimal128 Value Objects MUST implement these actions for these exceptions:

- Overflow

  - When overflow occurs, the operation MUST emit an error and result in a failure.

- Underflow

  - When underflow occurs, the operation MUST emit an error and result in a failure.

- Clamping

  - Since clamping does not change the actual value, only the representation of it, clamping MUST occur without emitting an error.

- Rounding

  - When the coefficient requires more digits than Decimal128 provides, rounding MUST be done without emitting an error, unless it would result in inexact rounding, in which case the operation MUST emit an error and result in a failure.

- Conversion Syntax

  - Invalid strings MUST emit an error and result in a failure.

It should be noted that the given exponent is a preferred representation. If the value cannot be stored because its exponent is too large or too small, but can be stored using an alternative representation by clamping and/or rounding, a BSON Decimal128 compatible Value Object MUST do so, unless such an operation results in inexact rounding or other underflow or overflow.
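Python's decimal module implements the same General Decimal Arithmetic specification, so a context configured with the decimal128 parameters can illustrate these rules. This is a sketch under that assumption, not a driver implementation: to_decimal128 is a hypothetical helper, clamp=1 enables clamping, and the trapped signals turn overflow, underflow, inexact rounding, and invalid strings into exceptions. Exact rounding (discarding only zeros) and clamping are not trapped, so they succeed silently.

```python
import decimal

# Assumed decimal128 parameters: 34 digits, Emax 6144, Emin -6143.
DECIMAL128 = decimal.Context(
    prec=34,
    Emax=6144,
    Emin=-6143,
    clamp=1,  # pad the coefficient with zeros instead of exceeding the max exponent
    rounding=decimal.ROUND_HALF_EVEN,
    traps=[decimal.Overflow, decimal.Underflow, decimal.Inexact, decimal.InvalidOperation],
)


def to_decimal128(text: str) -> decimal.Decimal:
    """Clamp or round exactly where possible; raise on overflow, underflow, inexact, or bad syntax."""
    return DECIMAL128.create_decimal(text)
```

For example, "1E6144" is clamped to a 34-digit coefficient with exponent 6111, "1." followed by 34 zeros is rounded exactly to 34 digits, and "1E6145" raises decimal.Overflow.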

Reading from BSON

A BSON type "\x13" MUST be represented by an immutable Value Object by default and MUST NOT be automatically converted into a language-native numeric type by default. A driver that offers users a way to configure the exact type mapping to and from BSON types MAY allow the BSON Decimal128 type to be converted to the user-configured type.

A driver SHOULD provide accessors for this immutable Value Object, which can return a language-specific representation of the Decimal128 value, after converting it into the respective type. For example, Java may choose to provide Decimal128.getBigDecimal().

All drivers MUST provide an accessor for retrieving the value as a string. Drivers MAY provide other accessors, retrieving the value as other types.

Serializing and writing BSON

Drivers MUST provide a way of constructing the Value Object, as the driver representation of the BSON Decimal128 is an immutable Value Object by default.

A driver MUST have a way to construct this Value Object from a string. For example, Java MUST provide a method similar to Decimal128.valueOf("2.000").

A driver that has accessors for different types SHOULD provide a way to construct the Value Object from those types.

Reading from Extended JSON

The Extended JSON representation of Decimal128 is a document with the key $numberDecimal and a value of the Decimal128 as a string. Drivers that support Extended JSON formatting MUST support the $numberDecimal type specifier.

When an Extended JSON $numberDecimal is parsed, its type should be the same as that of a deserialized BSON Decimal128, as described in Reading from BSON.

The Extended JSON $numberDecimal value follows the same stringification rules as defined in From String Representation.

Writing to Extended JSON

The Extended JSON type identifier is $numberDecimal, while the value itself is a string. Drivers that support converting values to Extended JSON MUST be able to convert its Decimal128 value object to Extended JSON.

Converting a Decimal128 Value Object to Extended JSON MUST follow the conversion rules in To String Representation, and other stringification rules as when converting Decimal128 Value Object to a String.

Operator overloading and math on Decimal128 Value Objects

Drivers MUST NOT allow any mathematical operator overloading for the Decimal128 Value Objects. This includes adding two Decimal128 Value Objects and assigning the result to a new object.

If a user wants to perform mathematical operations on Decimal128 Value Objects, the user must explicitly retrieve the native language value representations of the objects and perform the operations on those native representations. The user will then create a new Decimal128 Value Object and optionally overwrite the original Decimal128 Value Object.
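The Value Object requirements (immutable, constructible from a string, a string accessor, Extended JSON output, and no operator overloading) can be sketched in Python as follows. Everything here is hypothetical: the Decimal128 class is not any driver's API, and it uses decimal.Decimal as a convenient backing store because that type preserves the coefficient and exponent exactly. A real driver would more likely store the 16 BID bytes.

```python
import decimal
from dataclasses import dataclass

# Assumed decimal128 context, as in the exception-handling rules above.
_CONTEXT = decimal.Context(
    prec=34, Emax=6144, Emin=-6143, clamp=1,
    traps=[decimal.Overflow, decimal.Underflow, decimal.Inexact, decimal.InvalidOperation],
)


@dataclass(frozen=True)  # frozen: attribute assignment raises an error
class Decimal128:
    """Hypothetical immutable Value Object. No __add__ etc. is defined on purpose."""

    _value: decimal.Decimal

    @classmethod
    def from_string(cls, text: str) -> "Decimal128":
        return cls(_CONTEXT.create_decimal(text))  # invalid strings raise

    def __str__(self) -> str:
        if self._value.is_nan():
            return "NaN"  # every NaN variant stringifies as "NaN"
        return str(self._value)  # Python applies the to-scientific-string rules

    def as_decimal(self) -> decimal.Decimal:
        """Explicit accessor: the user converts before doing any arithmetic."""
        return self._value

    def to_extended_json(self) -> dict:
        return {"$numberDecimal": str(self)}
```

Adding two instances directly raises TypeError. To compute a sum, the user retrieves native values with as_decimal(), adds them, and builds a new object, for example Decimal128.from_string(str(a.as_decimal() + b.as_decimal())).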

From String Representation

For finite numbers, we will use the definition at https://speleotrove.com/decimal/daconvs.html. It has been modified to account for a different NaN representation and whitespace rules and copied here:

Strings which are acceptable for conversion to the abstract representation of numbers, or which might result from conversion from the abstract representation to a string, are called numeric strings.

A numeric string is a character string that describes either a finite number or a special value.

* If it describes a finite number, it includes one or more decimal digits, with an optional decimal point. The decimal point may be embedded in the digits, or may be prefixed or suffixed to them. The group of digits (and optional point) thus constructed may have an optional sign ('+' or '-') which must come before any digits or decimal point.

* The string thus described may optionally be followed by an 'E' (indicating an exponential part), an optional sign, and an integer following the sign that represents a power of ten that is to be applied. The 'E' may be in uppercase or lowercase.

* If it describes a special value, it is one of the case-independent names 'Infinity', 'Inf', or 'NaN' (where the first two represent infinity and the third represents NaN). The name may be preceded by an optional sign, as for finite numbers.

* No blanks or other whitespace characters are permitted in a numeric string.

Formally:

    sign           ::= '+' | '-'
    digit          ::= '0' | '1' | '2' | '3' | '4' | '5' | '6' | '7' |
                       '8' | '9'
    indicator      ::= 'e' | 'E'
    digits         ::= digit [digit]...
    decimal-part   ::= digits '.' [digits] | ['.'] digits
    exponent-part  ::= indicator [sign] digits
    infinity       ::= 'Infinity' | 'Inf'
    nan            ::= 'NaN'
    numeric-value  ::= decimal-part [exponent-part] | infinity
    numeric-string ::= [sign] numeric-value | [sign] nan

where the characters in the strings accepted for 'infinity' and 'nan' may be in any case. If an implementation supports the concept of diagnostic information on NaNs, the numeric strings for NaNs MAY include one or more digits.[3] These digits encode the diagnostic information in an implementation-defined manner; however, conversions to and from string for diagnostic NaNs should be reversible if possible. If an implementation does not support diagnostic information on NaNs, these digits should be ignored where necessary. A plain 'NaN' is usually the same as 'NaN0'.

Drivers MAY choose to support signaling NaN (sNaN), along with sNaN with diagnostic information.

Examples:

Some numeric strings are:

    "0"          -- zero
    "12"         -- a whole number
    "-76"        -- a signed whole number
    "12.70"      -- some decimal places
    "+0.003"     -- a plus sign is allowed, too
    "017."       -- the same as 17
    ".5"         -- the same as 0.5
    "4E+9"       -- exponential notation
    "0.73e-7"    -- exponential notation, negative power
    "Inf"        -- the same as Infinity
    "-infinity"  -- the same as -Infinity
    "NaN"        -- not-a-Number

Notes:

1. A single period alone or with a sign is not a valid numeric string.

2. A sign alone is not a valid numeric string.

3. Significant (after the decimal point) and insignificant leading zeros are permitted.
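A driver could validate input against this grammar before converting it. The Python sketch below is one way to do so under stated assumptions: is_numeric_string is a hypothetical helper, it accepts only a plain NaN (no diagnostic digits and no sNaN, both of which the text leaves optional), and it uses [0-9] rather than \d so that non-ASCII digits are rejected. Python's Decimal constructor is not used for validation here because it strips surrounding whitespace and underscores, which this grammar forbids.

```python
import re

_NUMERIC_STRING = re.compile(
    r"""
    [+-]?                                  # optional sign
    (?:
        (?:[0-9]+(?:\.[0-9]*)?|\.[0-9]+)   # decimal-part
        (?:[eE][+-]?[0-9]+)?               # optional exponent-part
      | (?i:inf(?:inity)?)                 # 'Inf' or 'Infinity', any case
      | (?i:nan)                           # 'NaN', any case
    )
    """,
    re.VERBOSE,
)


def is_numeric_string(text: str) -> bool:
    """True if the whole string matches the numeric-string grammar above."""
    return _NUMERIC_STRING.fullmatch(text) is not None
```

All of the examples listed above are accepted, while ".", "+", "1e", " 1", and "1_000" are rejected, consistent with the notes.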

To String Representation

For finite numbers, we will use the definition at https://speleotrove.com/decimal/daconvs.html. It has been copied here:

The coefficient is first converted to a string in base ten using the characters 0 through 9 with no leading zeros (except if its value is zero, in which case a single 0 character is used).

Next, the adjusted exponent is calculated; this is the exponent, plus the number of characters in the converted coefficient, less one. That is, exponent+(clength-1), where clength is the length of the coefficient in decimal digits.

If the exponent is less than or equal to zero and the adjusted exponent is greater than or equal to -6, the number will be converted to a character form without using exponential notation. In this case, if the exponent is zero then no decimal point is added. Otherwise (the exponent will be negative), a decimal point will be inserted with the absolute value of the exponent specifying the number of characters to the right of the decimal point. '0' characters are added to the left of the converted coefficient as necessary. If no character precedes the decimal point after this insertion then a conventional '0' character is prefixed.

Otherwise (that is, if the exponent is positive, or the adjusted exponent is less than -6), the number will be converted to a character form using exponential notation. In this case, if the converted coefficient has more than one digit a decimal point is inserted after the first digit. An exponent in character form is then suffixed to the converted coefficient (perhaps with inserted decimal point); this comprises the letter 'E' followed immediately by the adjusted exponent converted to a character form. The latter is in base ten, using the characters 0 through 9 with no leading zeros, always prefixed by a sign character ('-' if the calculated exponent is negative, '+' otherwise).

This corresponds to the following code snippet:

    var adjusted_exponent = _exponent + (clength - 1);
    if (_exponent > 0 || adjusted_exponent < -6) {
        // exponential notation
    } else {
        // character form without using exponential notation
    }
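Filling in both branches gives a complete formatter for finite values. The Python function below is a sketch: the name to_scientific_string and its (sign, coefficient, exponent) inputs are assumptions, and special values are left to the table of special-value strings rather than handled in code.

```python
def to_scientific_string(sign: int, coefficient: int, exponent: int) -> str:
    """Format (-1)**sign * coefficient * 10**exponent using the rules above."""
    digits = str(coefficient)                # base ten, no leading zeros; "0" for zero
    adjusted = exponent + (len(digits) - 1)  # exponent + (clength - 1)
    prefix = "-" if sign else ""
    if exponent <= 0 and adjusted >= -6:
        if exponent == 0:
            return prefix + digits           # no decimal point is added
        scale = -exponent                    # characters right of the point
        # Pad with '0' on the left so at least one character precedes the point.
        padded = digits.rjust(scale + 1, "0")
        return prefix + padded[:-scale] + "." + padded[-scale:]
    # Exponential notation: first digit, optional ".rest", then E and signed exponent.
    mantissa = digits if len(digits) == 1 else digits[0] + "." + digits[1:]
    exponent_sign = "-" if adjusted < 0 else "+"
    return f"{prefix}{mantissa}E{exponent_sign}{abs(adjusted)}"
```

For instance, a coefficient of 200 with exponent -2 yields "2.00", 123 with exponent 1 yields "1.23E+3", and 1 with exponent -7 yields "1E-7" because its adjusted exponent falls below -6.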

For special values such as infinity or the not-a-number (NaN) variants, the following table is used:

| Value | String |
|---|---|
| Positive Infinity | Infinity |
| Negative Infinity | -Infinity |
| Positive NaN | NaN |
| Negative NaN | NaN |
| Signaling NaN | NaN |
| Negative Signaling NaN | NaN |
| NaN with a payload | NaN |

Finally, there are certain other invalid representations that must be treated as zeros, as per IEEE 754-2008. The tests will verify that each special value has been accounted for.

The server log files as well as the Extended JSON Format for Decimal128 use this format.

Motivation for Change

BSON already contains support for double ("\x01"), but this type is insufficient for certain values that require strict precision and representation, such as money, where it is necessary to perform exact decimal rounding.

The new BSON type is the 128-bit IEEE 754-2008 decimal floating point number, which is specifically designed to cope with these issues.

Design Rationale

For simplicity and consistency between drivers, drivers must not automatically convert this type into a native type by default. This also ensures original data preservation, which is crucial to Decimal128. It is however recommended that drivers offer a way to convert the Value Object to a native type through accessors, and to create a new Value Object from native types. This forces the user to explicitly do the conversion and thus understand the difference between the MongoDB type and possible language precision and representation. Representations via conversions done outside MongoDB are not guaranteed to be identical.

Backwards Compatibility

There should be no backwards compatibility concerns. This specification merely deals with how to encode and decode BSON/Extended JSON Decimal128.

Reference Implementations

Tests

See the BSON Corpus for tests.

Most of the tests are converted from the General Decimal Arithmetic Testcases.

Q&A

- Is it true Decimal128 doesn’t normalize the value?

  - Yes. As a result of non-normalization rules of the Decimal128 data type, precision is represented exactly. For example, ‘2.00’ always remains stored as 200E-2 in Decimal128, and it differs from the representation of ‘2.0’ (20E-1). These two values compare equally, but represent different ideas.

- How does Decimal128 “2.000” look in the shell?

  - NumberDecimal("2.000")

- Should a driver avoid sending Decimal128 values to pre-3.4 servers?

  - No

- Is there a wire version bump or something for Decimal128?

  - No

Changelog

- 2024-02-08: Migrated from reStructuredText to Markdown.

- 2022-10-05: Remove spec front matter.

BSON Binary UUID

- Status: Accepted

- Minimum Server Version: N/A

Abstract

The Java, C#, and Python drivers natively support platform types for UUID, all of which by default encode them to and decode them from BSON binary subtype 3. However, each encodes the bytes in a different order from the others. To improve interoperability, BSON binary subtype 4 was introduced and defined the byte order according to RFC 4122, and a mechanism to configure each driver to encode UUIDs this way was added to each driver. The legacy representation remained the default for each driver.

This specification moves MongoDB drivers further towards the standard UUID representation by requiring an application relying on native UUID support to explicitly specify the representation it requires.

Drivers that support native UUID types will additionally create helpers on their BsonBinary class that will aid in conversion to and from the platform native UUID type.

META

The keywords “MUST”, “MUST NOT”, “REQUIRED”, “SHALL”, “SHALL NOT”, “SHOULD”, “SHOULD NOT”, “RECOMMENDED”, “MAY”, and “OPTIONAL” in this document are to be interpreted as described in RFC 2119.

Specification

Terms

UUID

A Universally Unique IDentifier

BsonBinary

An object that wraps an instance of a BSON binary value

Naming Deviations

All drivers MUST name operations, objects, and parameters as defined in the following sections.

The following deviations are permitted:

- Drivers can use the platform’s name for a UUID. For instance, in C# the platform class is Guid, whereas in Java it is UUID.

- Drivers can use a “to” prefix instead of an “as” prefix for the BsonBinary method names.

Explicit encoding and decoding

Any driver with a native UUID type MUST add the following UuidRepresentation enumeration and associated methods to its BsonBinary (or equivalent) class:

    enum UuidRepresentation {
        /**
         * An unspecified representation of UUID. Essentially, this is the null
         * representation value. This value is not required for languages that
         * have better ways of indicating, or preventing use of, a null value.
         */
        UNSPECIFIED("unspecified"),

        /**
         * The canonical representation of UUID according to RFC 4122,
         * section 4.1.2
         *
         * It encodes as BSON binary subtype 4
         */
        STANDARD("standard"),

        /**
         * The legacy representation of UUID used by the C# driver.
         *
         * In this representation the order of bytes 0-3 is reversed, the
         * order of bytes 4-5 is reversed, and the order of bytes 6-7 is
         * reversed.
         *
         * It encodes as BSON binary subtype 3
         */
        C_SHARP_LEGACY("csharpLegacy"),

        /**
         * The legacy representation of UUID used by the Java driver.
         *
         * In this representation the order of bytes 0-7 is reversed, and the
         * order of bytes 8-15 is reversed.
         *
         * It encodes as BSON binary subtype 3
         */
        JAVA_LEGACY("javaLegacy"),

        /**
         * The legacy representation of UUID used by the Python driver.
         *
         * As with STANDARD, this representation conforms with RFC 4122, section
         * 4.1.2
         *
         * It encodes as BSON binary subtype 3
         */
        PYTHON_LEGACY("pythonLegacy")
    }

    class BsonBinary {
        /*
         * Construct from a UUID using the standard UUID representation
         * [Specification] This constructor SHOULD be included but MAY be
         * omitted if it creates backwards compatibility issues
         */
        constructor(Uuid uuid)

        /*
         * Construct from a UUID using the given UUID representation.
         *
         * The representation must not be equal to UNSPECIFIED
         */
        constructor(Uuid uuid, UuidRepresentation representation)

        /*
         * Decode a subtype 4 binary to a UUID, erroring when the subtype is not 4.
         */
        Uuid asUuid()

        /*
         * Decode a subtype 3 or 4 to a UUID, according to the UUID
         * representation, erroring when subtype does not match the
         * representation.
         */
        Uuid asUuid(UuidRepresentation representation)
    }
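The byte-order rules in the enumeration can be checked with a short Python sketch. It is illustrative only: encode_uuid and decode_uuid are hypothetical stand-ins for the BsonBinary constructor and asUuid, and they return or accept a (subtype, data) pair instead of a BsonBinary object. Each legacy transform is its own inverse, so the same helper converts in both directions. For reference, Python's uuid.UUID.bytes_le already produces the C# legacy order.

```python
import uuid
from enum import Enum
from typing import Tuple


class UuidRepresentation(Enum):
    UNSPECIFIED = "unspecified"
    STANDARD = "standard"
    C_SHARP_LEGACY = "csharpLegacy"
    JAVA_LEGACY = "javaLegacy"
    PYTHON_LEGACY = "pythonLegacy"


def _reorder(data: bytes, rep: UuidRepresentation) -> bytes:
    """Swap between RFC 4122 byte order and the representation's byte order."""
    if rep is UuidRepresentation.C_SHARP_LEGACY:  # reverse 0-3, 4-5, 6-7
        return data[3::-1] + data[5:3:-1] + data[7:5:-1] + data[8:]
    if rep is UuidRepresentation.JAVA_LEGACY:     # reverse 0-7 and 8-15
        return data[7::-1] + data[15:7:-1]
    return data                                   # STANDARD, PYTHON_LEGACY


def _subtype_for(rep: UuidRepresentation) -> int:
    if rep is UuidRepresentation.UNSPECIFIED:
        raise ValueError("a UUID representation must be specified")
    return 4 if rep is UuidRepresentation.STANDARD else 3


def encode_uuid(value: uuid.UUID,
                rep: UuidRepresentation = UuidRepresentation.STANDARD) -> Tuple[int, bytes]:
    """Like constructor(uuid, representation): returns (subtype, data)."""
    return _subtype_for(rep), _reorder(value.bytes, rep)


def decode_uuid(subtype: int, data: bytes,
                rep: UuidRepresentation = UuidRepresentation.STANDARD) -> uuid.UUID:
    """Like asUuid(representation): the subtype must match the representation."""
    if subtype != _subtype_for(rep) or len(data) != 16:
        raise ValueError(f"subtype {subtype} does not match {rep.value}")
    return uuid.UUID(bytes=_reorder(data, rep))
```

Run against the test plan's UUID 00112233-4455-6677-8899-aabbccddeeff, this produces the subtypes and hex strings listed under Explicit encoding and Explicit Decoding.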

Implicit decoding and encoding

A new driver for a language with a native UUID type MUST NOT implicitly encode from or decode to the native UUID type. Rather, explicit conversion MUST be used as described in the previous section.

Drivers that already do such implicit encoding and decoding SHOULD support a URI option, uuidRepresentation, which controls the default behavior of the UUID codec. Alternatively, a driver MAY specify the UUID representation via global state.

| Value | Default? | Encode to | Decode subtype 4 to | Decode subtype 3 to |
|---|---|---|---|---|
| unspecified | yes | raise error | BsonBinary | BsonBinary |
| standard | no | BSON binary subtype 4 | native UUID | BsonBinary |
| csharpLegacy | no | BSON binary subtype 3 with C# legacy byte order | BsonBinary | native UUID |
| javaLegacy | no | BSON binary subtype 3 with Java legacy byte order | BsonBinary | native UUID |
| pythonLegacy | no | BSON binary subtype 3 with standard byte order | BsonBinary | native UUID |

For scenarios where the application makes the choice (e.g. a POJO with a field of type UUID), or when serializers are strongly typed and are constrained to always return values of a certain type, the driver will raise an exception in cases where otherwise it would be required to decode to a different type (e.g. BsonBinary instead of UUID or vice versa).

Note also that none of the above applies when decoding to strictly typed maps, e.g. a Map<String, BsonValue> such as Java’s or .NET’s BsonDocument class. In those cases the driver always decodes to BsonBinary, and applications would use the asUuid methods to explicitly convert from BsonBinary to UUID.

Implementation Notes

Since changing the default UUID representation can reasonably be considered a backwards-breaking change, drivers that implement the full specification should stage implementation according to semantic versioning guidelines. Specifically, support for this specification can be added to a minor release, but with several exceptions:

The default UUID representation should be left as is (e.g. JAVA_LEGACY for the Java driver) rather than be changed to UNSPECIFIED. In a subsequent major release, the default UUID representation can be changed to UNSPECIFIED (along with appropriate documentation indicating the backwards-breaking change). Drivers MUST document this in a prior minor release.

Test Plan

The test plan consists of a series of prose tests. They all operate on the same UUID, with the String representation of “00112233-4455-6677-8899-aabbccddeeff”.

Explicit encoding

- Create a BsonBinary instance with the given UUID

- Assert that the BsonBinary instance’s subtype is equal to 4 and data equal to the hex-encoded string “00112233445566778899AABBCCDDEEFF”

- Create a BsonBinary instance with the given UUID and UuidRepresentation equal to STANDARD

- Assert that the BsonBinary instance’s subtype is equal to 4 and data equal to the hex-encoded string “00112233445566778899AABBCCDDEEFF”

- Create a BsonBinary instance with the given UUID and UuidRepresentation equal to JAVA_LEGACY

- Assert that the BsonBinary instance’s subtype is equal to 3 and data equal to the hex-encoded string “7766554433221100FFEEDDCCBBAA9988”

- Create a BsonBinary instance with the given UUID and UuidRepresentation equal to CSHARP_LEGACY

- Assert that the BsonBinary instance’s subtype is equal to 3 and data equal to the hex-encoded string “33221100554477668899AABBCCDDEEFF”

- Create a BsonBinary instance with the given UUID and UuidRepresentation equal to PYTHON_LEGACY

- Assert that the BsonBinary instance’s subtype is equal to 3 and data equal to the hex-encoded string “00112233445566778899AABBCCDDEEFF”

- Create a BsonBinary instance with the given UUID and UuidRepresentation equal to UNSPECIFIED

- Assert that an error is raised

Explicit Decoding

- Create a BsonBinary instance with subtype equal to 4 and data equal to the hex-encoded string “00112233445566778899AABBCCDDEEFF”

- Assert that a call to BsonBinary.asUuid() returns the given UUID

- Assert that a call to BsonBinary.asUuid(STANDARD) returns the given UUID

- Assert that a call to BsonBinary.asUuid(UNSPECIFIED) raises an error

- Assert that a call to BsonBinary.asUuid(JAVA_LEGACY) raises an error

- Assert that a call to BsonBinary.asUuid(CSHARP_LEGACY) raises an error

- Assert that a call to BsonBinary.asUuid(PYTHON_LEGACY) raises an error

- Create a BsonBinary instance with subtype equal to 3 and data equal to the hex-encoded string “7766554433221100FFEEDDCCBBAA9988”

- Assert that a call to BsonBinary.asUuid() raises an error

- Assert that a call to BsonBinary.asUuid(STANDARD) raises an error

- Assert that a call to BsonBinary.asUuid(UNSPECIFIED) raises an error

- Assert that a call to BsonBinary.asUuid(JAVA_LEGACY) returns the given UUID

- Create a BsonBinary instance with subtype equal to 3 and data equal to the hex-encoded string “33221100554477668899AABBCCDDEEFF”

- Assert that a call to BsonBinary.asUuid() raises an error

- Assert that a call to BsonBinary.asUuid(STANDARD) raises an error

- Assert that a call to BsonBinary.asUuid(UNSPECIFIED) raises an error

- Assert that a call to BsonBinary.asUuid(CSHARP_LEGACY) returns the given UUID

- Create a BsonBinary instance with subtype equal to 3 and data equal to the hex-encoded string “00112233445566778899AABBCCDDEEFF”

- Assert that a call to BsonBinary.asUuid() raises an error

- Assert that a call to BsonBinary.asUuid(STANDARD) raises an error

- Assert that a call to BsonBinary.asUuid(UNSPECIFIED) raises an error

- Assert that a call to BsonBinary.asUuid(PYTHON_LEGACY) returns the given UUID

Implicit encoding

- Set the uuidRepresentation of the client to “javaLegacy”. Insert a document with an “_id” key set to the given native UUID value.

- Assert that the actual value inserted is a BSON binary with subtype 3 and data equal to the hex-encoded string “7766554433221100FFEEDDCCBBAA9988”

- Set the uuidRepresentation of the client to “csharpLegacy”. Insert a document with an “_id” key set to the given native UUID value.

- Assert that the actual value inserted is a BSON binary with subtype 3 and data equal to the hex-encoded string “33221100554477668899AABBCCDDEEFF”

- Set the uuidRepresentation of the client to “pythonLegacy”. Insert a document with an “_id” key set to the given native UUID value.

- Assert that the actual value inserted is a BSON binary with subtype 3 and data equal to the hex-encoded string “00112233445566778899AABBCCDDEEFF”

- Set the uuidRepresentation of the client to “standard”. Insert a document with an “_id” key set to the given native UUID value.

- Assert that the actual value inserted is a BSON binary with subtype 4 and data equal to the hex-encoded string “00112233445566778899AABBCCDDEEFF”

- Set the uuidRepresentation of the client to “unspecified”. Insert a document with an “_id” key set to the given native UUID value.

- Assert that a BSON serialization exception is thrown

Implicit Decoding

-

Set the uuidRepresentation of the client to “javaLegacy”. Insert a document containing two fields. The “standard” field should contain a BSON Binary created by creating a BsonBinary instance with the given UUID and the STANDARD UuidRepresentation. The “legacy” field should contain a BSON Binary created by creating a BsonBinary instance with the given UUID and the JAVA_LEGACY UuidRepresentation. Find the document.

- Assert that the value of the “standard” field is of type BsonBinary and is equal to the inserted value.

- Assert that the value of the “legacy” field is of the native UUID type and is equal to the given UUID

Repeat this test with the uuidRepresentation of the client set to “csharpLegacy” and “pythonLegacy”.

-

Set the uuidRepresentation of the client to “standard”. Insert a document containing two fields. The “standard” field should contain a BSON Binary created by creating a BsonBinary instance with the given UUID and the STANDARD UuidRepresentation. The “legacy” field should contain a BSON Binary created by creating a BsonBinary instance with the given UUID and the PYTHON_LEGACY UuidRepresentation. Find the document.

- Assert that the value of the “standard” field is of the native UUID type and is equal to the given UUID

- Assert that the value of the “legacy” field is of type BsonBinary and is equal to the inserted value.

- Set the uuidRepresentation of the client to “unspecified”. Insert a document containing two fields. The “standard” field should contain a BSON Binary obtained by constructing a BsonBinary instance from the given UUID and the STANDARD UuidRepresentation. The “legacy” field should contain a BSON Binary obtained by constructing a BsonBinary instance from the given UUID and the PYTHON_LEGACY UuidRepresentation. Find the document.

- Assert that the value of the “standard” field is of type BsonBinary and is equal to the inserted value

- Assert that the value of the “legacy” field is of type BsonBinary and is equal to the inserted value.

Repeat this test with the uuidRepresentation of the client set to “csharpLegacy” and “pythonLegacy”.

Note: the assertions will be different in the release prior to the major release, to avoid breaking changes. Adjust accordingly!
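The implicit-decoding assertions above follow a simple rule. A subtype 4 binary becomes a native UUID only under “standard”. A subtype 3 binary becomes a native UUID only under one of the legacy representations, using that representation's byte order. Every other combination, including “unspecified”, stays a binary value.

The following is a minimal sketch of that rule. `Binary` is a hypothetical stand-in for a driver's BsonBinary class, and `decode_binary` is illustrative only. The sketch models the post-major-release behavior the tests assert.

```python
import uuid
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Binary:
    """Hypothetical stand-in for a driver's BsonBinary type."""
    subtype: int
    data: bytes


LEGACY_REPRESENTATIONS = {"javaLegacy", "csharpLegacy", "pythonLegacy"}


def _uuid_from_legacy(data: bytes, representation: str) -> uuid.UUID:
    if representation == "csharpLegacy":
        return uuid.UUID(bytes_le=data)
    if representation == "javaLegacy":
        # Undo the per-half reversal used by the Java legacy format.
        return uuid.UUID(bytes=data[0:8][::-1] + data[8:16][::-1])
    return uuid.UUID(bytes=data)  # pythonLegacy keeps RFC 4122 order


def decode_binary(value: Binary, representation: str) -> Union[uuid.UUID, Binary]:
    if value.subtype == 4 and representation == "standard":
        return uuid.UUID(bytes=value.data)
    if value.subtype == 3 and representation in LEGACY_REPRESENTATIONS:
        return _uuid_from_legacy(value.data, representation)
    # All other combinations (including "unspecified") are left as binary.
    return value
```

Under “javaLegacy”, for example, a subtype 4 value comes back unchanged as a `Binary`. A subtype 3 value written in Java byte order comes back as the original `uuid.UUID`.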

Q & A

What’s the rationale for the deviations allowed by the specification?

In short, the C# driver has existing behavior that makes it infeasible for it to work the same way as other drivers.

The C# driver has a global serialization registry. Because the registry is global rather than per-MongoClient, the C# driver cannot feasibly override the UUID representation on a per-MongoClient basis; doing so would require a per-MongoClient registry. Instead, the specification allows for a global override so that the C# driver can implement the specification.

Additionally, the C# driver has an existing configuration parameter that controls the behavior of BSON readers and writers at a level below the serializers. This configuration affects the semantics of the existing BsonBinary class in a way that doesn’t allow for the constructor(UUID) mentioned in the specification. For this reason, that constructor is specified as optional.

Changelog

- 2024-08-01: Migrated from reStructuredText to Markdown.

- 2022-10-05: Remove spec front matter.

DBRef

- Status: Accepted

- Minimum Server Version: N/A

Abstract

DBRefs are a convention for expressing a reference to another document as an embedded document (i.e. BSON type 0x03). Several drivers provide a model class for encoding and/or decoding DBRef documents. This specification will both define the structure of a DBRef and provide guidance for implementing model classes in drivers that choose to do so.

META

The keywords “MUST”, “MUST NOT”, “REQUIRED”, “SHALL”, “SHALL NOT”, “SHOULD”, “SHOULD NOT”, “RECOMMENDED”, “MAY”, and “OPTIONAL” in this document are to be interpreted as described in RFC 2119.

This specification presents documents as Extended JSON for readability and expressing special types (e.g. ObjectId). Although JSON fields are unordered, the order of fields presented herein should be considered pertinent. This is especially relevant for the Test Plan.

Specification

DBRef Structure

A DBRef is an embedded document with the following fields:

- $ref: required string field. Contains the name of the collection where the referenced document resides. This MUST be the first field in the DBRef.

- $id: required field. Contains the value of the _id field of the referenced document. This MUST be the second field in the DBRef.

- $db: optional string field. Contains the name of the database where the referenced document resides. If specified, this MUST be the third field in the DBRef. If omitted, the referenced document is assumed to reside in the same database as the DBRef.

- Extra, optional fields may follow after $id or $db (if specified). There are no inherent restrictions on extra field names; however, older server versions may impose their own restrictions (e.g. no dots or dollars).

DBRefs have no relation to the deprecated DBPointer BSON type (i.e. type 0x0C).

Examples of Valid DBRefs

The following examples are all valid DBRefs:

// Basic DBRef with only $ref and $id fields

{ "$ref": "coll0", "$id": { "$oid": "60a6fe9a54f4180c86309efa" } }

// DBRef $id is not necessarily an ObjectId

{ "$ref": "coll0", "$id": 1 }

// DBRef with optional $db field

{ "$ref": "coll0", "$id": 1, "$db": "db0" }

// DBRef with extra, optional fields (with or without $db)

{ "$ref": "coll0", "$id": 1, "$db": "db0", "foo": "bar" }

{ "$ref": "coll0", "$id": 1, "foo": true }

// Extra field names have no inherent restrictions

{ "$ref": "coll0", "$id": 1, "$foo": "bar" }

{ "$ref": "coll0", "$id": 1, "foo.bar": 0 }

Examples of Invalid DBRefs

The following examples are all invalid DBRefs:

// Required fields are omitted

{ "$ref": "coll0" }

{ "$id": { "$oid": "60a6fe9a54f4180c86309efa" } }

// Invalid types for $ref or $db

{ "$ref": true, "$id": 1 }

{ "$ref": "coll0", "$id": 1, "$db": 1 }

// Fields are out of order

{ "$id": 1, "$ref": "coll0" }
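The DBRef Structure rules can be checked mechanically. The following is a minimal sketch. It assumes a Python `dict` stands in for a BSON document, since dicts preserve insertion order from Python 3.7 onward. `is_valid_dbref` is a hypothetical helper. It applies the strict field-order rule from DBRef Structure. The decoding tests in the Test Plan are deliberately more lenient and accept out-of-order fields.

```python
from typing import Any, Dict


def is_valid_dbref(doc: Dict[str, Any]) -> bool:
    """Check an ordered document against the DBRef Structure rules."""
    names = list(doc)  # dict keys in insertion (i.e. field) order
    # $ref MUST be first and $id MUST be second.
    if len(names) < 2 or names[0] != "$ref" or names[1] != "$id":
        return False
    if not isinstance(doc["$ref"], str):
        return False
    if "$db" in doc:
        # Optional, but if present it MUST be the third field and a string.
        if names[2] != "$db" or not isinstance(doc["$db"], str):
            return False
    # Any extra fields, with any names and values, may follow.
    return True
```

Running it over the examples above classifies each of them as listed. For instance, `{"$ref": "coll0", "$id": 1, "foo.bar": 0}` is accepted, while `{"$id": 1, "$ref": "coll0"}` is rejected.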

Implementing a DBRef Model

Drivers MAY provide a model class for encoding and/or decoding DBRef documents. For those drivers that do, this section defines expected behavior of that class. This section does not prohibit drivers from implementing additional functionality, provided it does not conflict with any of these guidelines.

Constructing a DBRef model

Drivers MAY provide an API for constructing a DBRef model directly from its constituent parts. If so:

- Drivers MUST solicit a string value for $ref.

- Drivers MUST solicit an arbitrary value for $id. Drivers SHOULD NOT enforce any restrictions on this value; however, this may be necessary if the driver is unable to differentiate between certain BSON types (e.g. null, undefined) and the parameter being unspecified.

- Drivers SHOULD solicit an optional string value for $db.

- Drivers MUST require $ref and $db (if specified) to be strings but MUST NOT enforce any naming restrictions on the string values.

- Drivers MAY solicit extra, optional fields.
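The constructor rules above can be illustrated in a few lines. The following is a minimal sketch; the class name `DBRef` and its parameters (`ref`, `id_`, `db`, `extra`) are hypothetical and are not taken from any driver. The `$id` parameter is a required positional argument. Python can therefore distinguish an explicit `None` (BSON null) from an omitted argument, so the sketch does not need to restrict `$id` at all.

```python
from typing import Any, Mapping, Optional


class DBRef:
    """Hypothetical DBRef model; names and signature are illustrative only."""

    def __init__(self, ref: str, id_: Any, db: Optional[str] = None,
                 extra: Optional[Mapping[str, Any]] = None) -> None:
        # $ref and $db (if given) must be strings; their contents are not checked.
        if not isinstance(ref, str):
            raise TypeError("$ref must be a string")
        if db is not None and not isinstance(db, str):
            raise TypeError("$db must be a string")
        self.ref = ref
        self.id_ = id_  # any value, including None (BSON null), is accepted
        self.db = db
        # Copy extra fields, preserving the caller's order.
        self.extra = dict(extra or {})
```

Taking `extra` as a mapping rather than as `**kwargs` is a design choice. It avoids collisions with parameter names and allows field names such as "foo.bar" or "$foo".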

Decoding a BSON document to a DBRef model

Drivers MAY support explicit and/or implicit decoding. An example of explicit decoding might be a DBRef model constructor that takes a BSON document. An example of implicit decoding might be configuring the driver’s BSON codec to automatically convert embedded documents that comply with the DBRef Structure into a DBRef model.

Drivers that provide implicit decoding SHOULD provide some way for applications to opt out and allow DBRefs to be decoded like any other embedded document.

When decoding a BSON document to a DBRef model:

- Drivers MUST require $ref and $id to be present.

- Drivers MUST require $ref and $db (if present) to be strings but MUST NOT enforce any naming restrictions on the string values.

- Drivers MUST accept any BSON type for $id and MUST NOT enforce any restrictions on its value.

- Drivers MUST preserve extra, optional fields (beyond $ref, $id, and $db) and MUST provide some way to access those fields via the DBRef model. For example, an accessor method that returns the original BSON document (including $ref, etc.) would fulfill this requirement.

If a BSON document cannot be implicitly decoded to a DBRef model, it MUST be left as-is (like any other embedded document). If a BSON document cannot be explicitly decoded to a DBRef model, the driver MUST raise an error.

Since DBRefs are a special type of embedded document, a DBRef model class used for decoding SHOULD inherit the class used to represent an embedded document (e.g. Hash in Ruby). This will allow applications to always expect an instance of a common class when decoding an embedded document (if desired) and should also support the requirement for DBRef models to provide access to any extra, optional fields.
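The decoding rules above can be sketched in Python, where `dict` plays the role of the embedded-document class. The names `DBRef`, `decode_explicit`, and `decode_implicit` are hypothetical.

Because the model subclasses `dict`, every field of the original document, including extra ones, remains accessible. Explicit decoding raises an error for a non-conforming document, while implicit decoding leaves it as an ordinary `dict`. Field order is not checked, which matches the Test Plan's requirement that out-of-order documents still decode.

```python
from typing import Any, Mapping, Optional


class DBRef(dict):
    """Hypothetical DBRef model that subclasses the embedded-document type."""

    @property
    def ref(self) -> str:
        return self["$ref"]

    @property
    def id(self) -> Any:
        return self["$id"]

    @property
    def db(self) -> Optional[str]:
        return self.get("$db")


def _dbref_error(doc: Mapping[str, Any]) -> Optional[str]:
    """Return why doc cannot be a DBRef, or None if it can."""
    if "$ref" not in doc or "$id" not in doc:
        return "$ref and $id are required"
    if not isinstance(doc["$ref"], str):
        return "$ref must be a string"
    if "$db" in doc and not isinstance(doc["$db"], str):
        return "$db must be a string"
    return None  # $id may hold any value, including None


def decode_explicit(doc: Mapping[str, Any]) -> DBRef:
    error = _dbref_error(doc)
    if error is not None:
        raise ValueError(error)
    return DBRef(doc)  # copies every field, so extra fields stay accessible


def decode_implicit(doc: dict) -> dict:
    # Non-conforming documents are left as-is, like any embedded document.
    return DBRef(doc) if _dbref_error(doc) is None else doc
```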

Encoding a DBRef model to a BSON document

Drivers MAY support explicit and/or implicit encoding. An example of explicit encoding might be a DBRef method that returns its corresponding representation as a BSON document. An example of implicit encoding might be configuring the driver’s BSON codec to automatically convert DBRef models to the corresponding BSON document representation as needed.

If a driver supports implicit decoding of BSON to a DBRef model, it SHOULD also support implicit encoding. Doing so will allow applications to more easily round-trip DBRefs through the driver.

When encoding a DBRef model to a BSON document:

- Drivers MUST encode all fields in the order defined in DBRef Structure.

- Drivers MUST encode $ref and $id. If $db was specified, it MUST be encoded after $id. If any extra, optional fields were specified, they MUST be encoded after $id or $db.

- If the DBRef includes any extra, optional fields after $id or $db, drivers SHOULD attempt to preserve the original order of those fields relative to one another.
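The ordering rules above amount to building the document in a fixed sequence. The following is a minimal sketch that assumes an insertion-ordered Python `dict` stands in for the BSON document. `encode_dbref` is a hypothetical helper. The sketch rejects extra fields named $ref, $id, or $db; that is an assumption, because the specification does not address such collisions.

```python
from typing import Any, Dict, Mapping, Optional

RESERVED = ("$ref", "$id", "$db")


def encode_dbref(ref: str, id_: Any, db: Optional[str] = None,
                 extra: Optional[Mapping[str, Any]] = None) -> Dict[str, Any]:
    """Build a DBRef document with fields in DBRef Structure order."""
    doc: Dict[str, Any] = {"$ref": ref, "$id": id_}  # always first and second
    if db is not None:
        doc["$db"] = db  # third, only when specified
    for name, value in (extra or {}).items():
        # Assumption: an extra field may not reuse a reserved name.
        if name in RESERVED:
            raise ValueError(f"extra field {name!r} conflicts with a DBRef field")
        doc[name] = value  # extra fields keep their original relative order
    return doc
```

For example, `encode_dbref("coll0", 1, "db0", {"b": 1, "a": 2})` yields fields in the order $ref, $id, $db, b, a.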

Test Plan

The test plan consists of a series of prose tests. These tests are only relevant to drivers that provide a DBRef model class.

The documents in these tests are presented as Extended JSON for readability; however, readers should consider the field order pertinent when translating to BSON (or their language equivalent). These tests are not intended to exercise a driver’s Extended JSON parser. Implementations SHOULD construct the documents directly using native BSON types (e.g. Document, ObjectId).

Decoding

These tests are only relevant to drivers that allow decoding into a DBRef model. Drivers SHOULD implement these tests for both explicit and implicit decoding code paths as needed.

- Valid documents MUST be decoded to a DBRef model. For each of the following:

{ "$ref": "coll0", "$id": { "$oid": "60a6fe9a54f4180c86309efa" } }

{ "$ref": "coll0", "$id": 1 }

{ "$ref": "coll0", "$id": null }

{ "$ref": "coll0", "$id": 1, "$db": "db0" }

Assert that each document is successfully decoded to a DBRef model. Assert that the $ref, $id, and $db (if applicable) fields have their expected value.

- Valid documents with extra fields MUST be decoded to a DBRef model and the model MUST provide some way to access those extra fields. For each of the following:

{ "$ref": "coll0", "$id": 1, "$db": "db0", "foo": "bar" }

{ "$ref": "coll0", "$id": 1, "foo": true, "bar": false }

{ "$ref": "coll0", "$id": 1, "meta": { "foo": 1, "bar": 2 } }

{ "$ref": "coll0", "$id": 1, "$foo": "bar" }

{ "$ref": "coll0", "$id": 1, "foo.bar": 0 }

Assert that each document is successfully decoded to a DBRef model. Assert that the $ref, $id, and $db (if applicable) fields have their expected value. Assert that it is possible to access all extra fields and that those fields have their expected value.

- Documents with out of order fields that are otherwise valid MUST be decoded to a DBRef model. For each of the following:

{ "$id": 1, "$ref": "coll0" }

{ "$db": "db0", "$ref": "coll0", "$id": 1 }

{ "foo": 1, "$id": 1, "$ref": "coll0" }

{ "foo": 1, "$ref": "coll0", "$id": 1, "$db": "db0" }

{ "foo": 1, "$ref": "coll0", "$id": 1, "$db": "db0", "bar": 1 }

Assert that each document is successfully decoded to a DBRef model. Assert that the $ref, $id, $db (if applicable), and any extra fields (if applicable) have their expected value.

- Documents missing required fields MUST NOT be decoded to a DBRef model. For each of the following: